From UPI to “UPI for AI”: What Interoperability Should Really Mean

India can build AI rails—but only if we stop pretending AI is as simple as payments.

When Nandan Nilekani and others invoke the idea of “AI like payment rails”, it immediately resonates in India. We have lived through the leapfrog: Aadhaar, UPI, FASTag, DigiLocker, ONDC. Open standards plus public infrastructure turned a fragmented payments landscape into something that “just works” across apps, banks and platforms.

It is tempting to imagine doing the same for artificial intelligence. Instead of a dozen isolated pilots and proprietary assistants, we dream of an AI ecosystem where models, data and services can be swapped and combined—a national network of interoperable intelligence, embedded into everyday workflows from agriculture to health.

But here’s the uncomfortable truth: you cannot copy-paste the UPI template onto AI. Safety, interoperability and accountability are still the right cornerstones—but enforcing them in AI is far more complex than clearing a fund transfer.

The question is not whether AI can be made interoperable. It is what kind of interoperability is realistic, and what India must standardise—and deliberately refuse to standardise—to get there.

Payments are simple. Intelligence is not.

UPI works because the thing being moved is simple: a rupee. A payment either succeeds or fails. Messages have a fixed format; reconciliation is deterministic; disputes are narrow in scope. Regulators can write crisp rules because behaviour is predictable and the state space is small.

AI is the opposite.

Probabilistic outputs. A model does not “succeed” or “fail” in binary terms. It produces a distribution of plausible answers, some of which may be subtly wrong, biased or harmful.

Context dependence. The same model output might be harmless in a classroom, dangerous in a medical triage, and politically sensitive in an election. Safety depends on who is using AI, for what, and with what authority.

Shifting frontier. Payment protocols evolve slowly; AI models change every few months. Any standard that tries to regulate the internal architecture of models will be obsolete before the ink dries.

No single unit of value. There is no AI equivalent of the rupee. “Translation”, “advice”, “summarisation”, “prediction” and “recommendation” are not uniform commodities.

This is why slogans like “UPI for AI” can be misleading. We don’t need a single AI platform. We need AI rails in a narrower, more technical sense: common protocols and governance at the boundaries, not uniformity inside the models.

What, exactly, should interoperate?

If we take the payment-rails metaphor seriously, interoperability for AI should mean three things:

Standard interfaces for common tasks.

A government department or startup should be able to call “translate”, “transcribe”, “summarise”, “retrieve over these documents” or “rank these options” through well-defined APIs, with explicit metadata: language, domain, risk level, user role, consent status.

Replaceable models behind those interfaces.

A health platform in Odisha should be able to switch between multiple certified models (open-source, Indian, global) without rewriting its entire stack, just as a UPI app can change its partner bank.

Shared safety and logging rules around the interface.

Every high-stakes AI “transaction” (a piece of advice that affects money, rights, health, liberty) should carry a traceable header: which model, which version, which institution authorised it, and what guardrails were applied.

Notice what is not being standardised: architecture, training recipe, number of parameters. The rail is the contract, not the brain behind it.
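To make “the rail is the contract” concrete, here is a minimal Python sketch of the three ideas above: a shared interface, swappable models behind it, and a traceable header on each call. Every name in it (`RequestMeta`, `TraceHeader`, `Summariser`, `call_summarise`, the two toy models) is hypothetical and invented for illustration; no existing Indian standard defines these fields, and a real specification would be far richer.

```python
from dataclasses import dataclass
from typing import Protocol
import uuid


@dataclass(frozen=True)
class RequestMeta:
    """Metadata carried by every call (field names are assumptions)."""
    language: str
    domain: str
    risk_level: str        # e.g. "low" or "high"; labels are assumed
    user_role: str
    consent_given: bool


@dataclass(frozen=True)
class TraceHeader:
    """Traceable header attached to an AI 'transaction'."""
    transaction_id: str
    model_name: str
    model_version: str
    authorising_institution: str
    guardrails_applied: tuple


class Summariser(Protocol):
    """The rail: any model exposing this contract can be plugged in."""
    name: str
    version: str

    def summarise(self, text: str, meta: RequestMeta) -> str: ...


class FirstSentenceModel:
    """Toy stand-in for one vendor's model."""
    name, version = "toy-first-sentence", "0.1"

    def summarise(self, text: str, meta: RequestMeta) -> str:
        return text.split(".")[0].strip() + "."


class TruncatingModel:
    """Toy stand-in for a second, interchangeable model."""
    name, version = "toy-truncate", "0.3"

    def summarise(self, text: str, meta: RequestMeta) -> str:
        return text[:40].strip()


def call_summarise(model: Summariser, text: str, meta: RequestMeta,
                   institution: str):
    """Call any conforming model and return (output, trace header)."""
    if not meta.consent_given:
        raise PermissionError("consent not recorded for this request")
    output = model.summarise(text, meta)
    header = TraceHeader(
        transaction_id=str(uuid.uuid4()),
        model_name=model.name,
        model_version=model.version,
        authorising_institution=institution,
        guardrails_applied=("consent_check",),
    )
    return output, header
```

The calling code never inspects how a model works internally. Swapping `FirstSentenceModel` for `TruncatingModel` changes one argument, and the header records which one actually answered.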

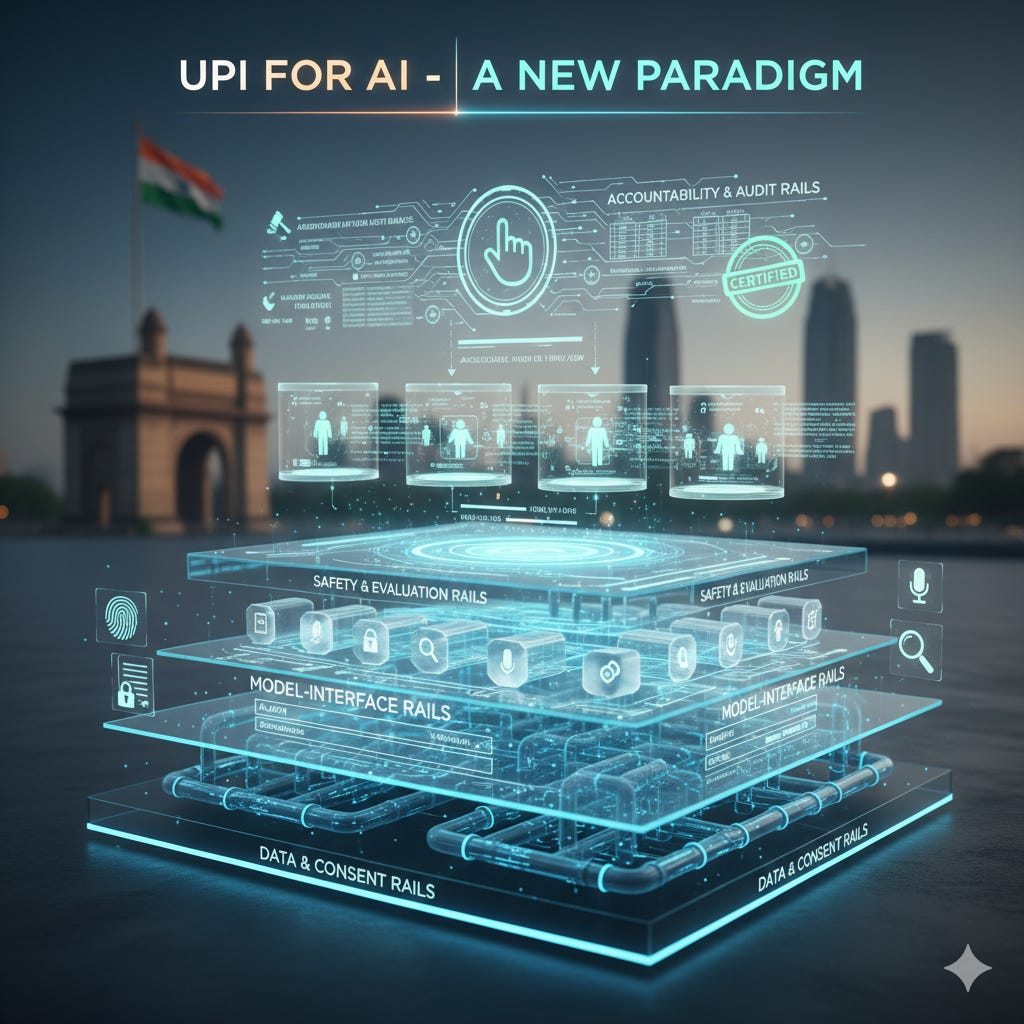

A layered “AI DPI” stack for India

India already has the building blocks. Data and consent layers are taking shape through the DPDP Act and sectoral data exchanges in health, agriculture and education. Bhashini and AI4Bharat are building linguistic infrastructure. What’s missing is a coherent view of how these pieces stack together.

A realistic blueprint could have four layers:

Data & consent rails.

Standardised schemas and APIs for accessing government and institutional data with purpose limitation, consent, and revocation.

Sectoral stacks (health, agriculture, skilling) that define what can be shared, at what granularity, and under what protections.

Built-in anonymisation where individual identity is not required.

Model-interface rails.

For each class of task—translation, transcription, retrieval-QA, summarisation, content classification—define common request/response formats and mandatory metadata fields.

Require models to publish clear capability declarations: which languages, domains and risk classes they support, and which they do not.

Safety & evaluation rails.

National test suites for accuracy, robustness and bias across Indian languages and demographic groups, maintained by independent public labs.

A simple but powerful idea: risk classes. Low-risk tasks (e.g., entertainment chat, generic encyclopaedic questions) face lighter requirements. High-risk tasks (health, welfare eligibility, credit decisions, policing) require stronger testing, human-in-the-loop enforcement and deeper logging.

Periodic recertification as models, data and use-cases evolve.

Accountability & audit rails.

Clear legal separation of roles: model provider, service integrator, deploying institution.

Contracts and regulation that specify liability: bad training data vs unsafe deployment vs lack of human oversight.

Standardised “AI incident reporting” channels and the ability to suspend or downgrade a model’s certification if it repeatedly fails in the field.

If these layers exist, interoperability becomes meaningful: any model that speaks the protocol and passes the tests can plug into India’s AI ecosystem; any institution can switch models without being locked into a single vendor’s black box.
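One way to picture the “speaks the protocol and passes the tests” check is a capability declaration matched against each incoming request. The sketch below is only illustrative: the two-level `RiskClass` scale, the `CapabilityDeclaration` fields and the `can_serve` function are assumptions made for this example, not part of any published scheme.

```python
from dataclasses import dataclass
from enum import IntEnum


class RiskClass(IntEnum):
    """Assumed two-level scale; a real scheme may define more tiers."""
    LOW = 1
    HIGH = 2


@dataclass(frozen=True)
class CapabilityDeclaration:
    """What a model publicly claims to support (hypothetical fields)."""
    model_id: str
    tasks: frozenset
    languages: frozenset
    domains: frozenset
    certified_up_to: RiskClass


def can_serve(decl, task, language, domain, risk):
    """Return (allowed, reason) for a request against a declaration."""
    if task not in decl.tasks:
        return False, "task not declared"
    if language not in decl.languages:
        return False, "language not declared"
    if domain not in decl.domains:
        return False, "domain not declared"
    if risk > decl.certified_up_to:
        return False, "risk class exceeds certification"
    return True, "ok"


# Example declaration for an imaginary translation model.
decl = CapabilityDeclaration(
    model_id="example-translate-v1",
    tasks=frozenset({"translation"}),
    languages=frozenset({"hi", "mr", "or"}),
    domains=frozenset({"agriculture", "general"}),
    certified_up_to=RiskClass.LOW,
)

print(can_serve(decl, "translation", "mr", "agriculture", RiskClass.LOW))
print(can_serve(decl, "translation", "mr", "agriculture", RiskClass.HIGH))
```

The second call is refused because the model is certified only for low-risk use. That refusal is the kind of explicit, machine-checkable boundary that risk classes are meant to provide.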

Making the cornerstones real

Stating that AI must be safe, interoperable and accountable is easy. Making it true requires using the right levers.

Public procurement as a forcing function.

If governments at Union and state level simply mandate that any AI solution they procure must comply with common interfaces, publish evaluation results and support logging and audit, the ecosystem will move. Vendors will align with standards not out of altruism, but to win contracts.

Certification with teeth, not rubber stamps.

Certification should be tiered and enforceable. A model certified only for low-risk use cannot silently “graduate” into welfare decisions or policing just because an enthusiastic department plugged it in.

Trusted “AI orchestrators”.

Instead of every department managing its own hodge-podge of APIs, India can license or create neutral orchestration layers, public or private, that route requests to certified models, enforce policies, and maintain logs, analogous in spirit to NPCI in payments.

Human feedback from the last mile.

For India, the most important safety signal won’t come from elite benchmarks. It will come from farmers, ASHA workers, teachers, frontline officials saying: “This answer was wrong and harmful.” Reporting mechanisms must be simple, and responses must be visible—otherwise trust will evaporate.
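The orchestrator and last-mile feedback levers can be sketched together: a router that sends requests only to models certified for the relevant risk tier, logs every decision, and suspends a model after repeated field reports. This is a toy illustration under stated assumptions: the `Orchestrator` and `RegisteredModel` classes, the numeric risk scale and the three-incident threshold are all invented here, and a real policy would need due process rather than a simple counter.

```python
from dataclasses import dataclass, field


@dataclass
class RegisteredModel:
    model_id: str
    max_risk: int             # assumed scale: 1 = low-risk only, 2 = high-risk too
    incident_count: int = 0
    suspended: bool = False


@dataclass
class Orchestrator:
    suspend_after: int = 3    # hypothetical threshold, not a recommendation
    models: dict = field(default_factory=dict)
    audit_log: list = field(default_factory=list)

    def register(self, model: RegisteredModel) -> None:
        self.models[model.model_id] = model

    def route(self, risk: int) -> str:
        """Return the first active model certified for this risk tier."""
        for m in self.models.values():
            if not m.suspended and m.max_risk >= risk:
                self.audit_log.append(
                    {"event": "routed", "model": m.model_id, "risk": risk})
                return m.model_id
        self.audit_log.append({"event": "refused", "risk": risk})
        raise LookupError("no certified model available for this risk tier")

    def report_incident(self, model_id: str, note: str) -> None:
        """Record a field report; suspend the model once the threshold is hit."""
        m = self.models[model_id]
        m.incident_count += 1
        self.audit_log.append(
            {"event": "incident", "model": model_id, "note": note})
        if m.incident_count >= self.suspend_after and not m.suspended:
            m.suspended = True
            self.audit_log.append({"event": "suspended", "model": model_id})
```

A low-risk-only model is never selected for a high-risk request, however it was registered, and each report from the field leaves a visible entry in the log. Both properties correspond to the “teeth” and the visibility argued for above.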

The real opportunity

Much of the world still treats AI primarily as a consumer gadget or a productivity toy. India is forced, by necessity, to think of AI as infrastructure: something that must work on a feature phone in Marathi for a marginal farmer, not just on a flagship smartphone in English.

That constraint is a gift.

If we can show that AI can be:

swapped and combined like payment rails,

governed like public infrastructure, and

deployed safely in messy, low-resource, multilingual realities,

then India’s contribution to AI will be larger than any single model or startup. We will have demonstrated that intelligence, like money, can flow through open rails: not as a runaway train, but as a public utility that raises the floor of everyday life.

The content has been generated using GenAI. Research and data are mine. This does not represent the views of the organization I am working in or the organizations I am associated with!