Parameter-Efficient Fine-Tuning for Large Models: A Comprehensive Survey

"Techniques, Challenges, and Future Directions"


Abstract

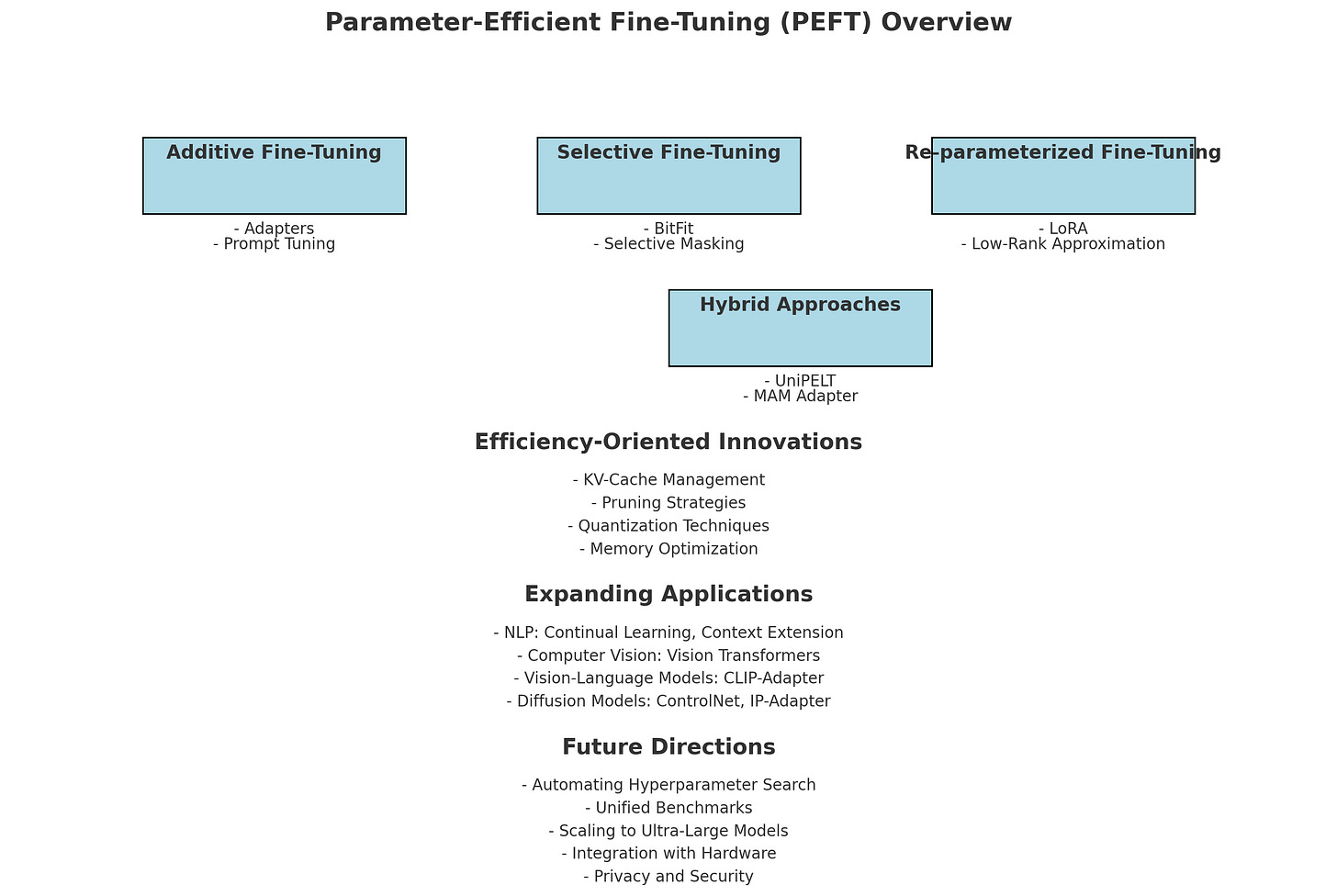

Large Models (LMs) have revolutionized machine learning, delivering exceptional performance across domains such as natural language processing (NLP), computer vision (CV), and multimodal tasks. However, their full fine-tuning remains computationally expensive and memory-intensive, creating barriers to their practical application. Parameter-Efficient Fine-Tuning (PEFT) emerges as a transformative paradigm that addresses these challenges by selectively modifying or introducing only a small fraction of the model’s parameters, thereby significantly reducing computational overhead while retaining task-specific adaptability. PEFT methods are categorized into four key types: additive methods like Adapters and Prefix Tuning, which integrate lightweight modules or tokens into the model; selective methods such as BitFit, which fine-tune specific components like bias terms; reparameterized approaches like LoRA, which optimize efficiency by decomposing weight matrices into low-rank components; and hybrid methods that combine these strategies to balance flexibility and efficiency. Complementary techniques, such as pruning, quantization, and memory-optimized training, further enhance PEFT’s computational efficiency without sacrificing performance. The versatility of PEFT is evident in its applications across various domains, including adapting large language models for NLP, fine-tuning vision transformers for computer vision, aligning vision-language models for multimodal tasks, and specializing diffusion models for generative tasks. Beyond task-specific adaptations, system-level designs for centralized PEFT serving, distributed training, and multi-tenant deployment ensure scalability and cost-efficiency in real-world applications. Despite its promise, PEFT faces open challenges, including hyperparameter sensitivity, instability on small datasets, and scaling limitations for ultra-large models. 
These challenges present opportunities for future innovations, such as automated hyperparameter optimization and integration with federated learning systems. By bridging efficiency and adaptability, PEFT represents a foundational shift in fine-tuning strategies, offering a scalable and cost-effective solution for leveraging the power of LMs in both research and industrial settings.

LoRA (Low-Rank Adaptation)

Hu et al. (2022) introduced LoRA, which adapts large language models efficiently by applying low-rank updates to the model weights. This method significantly reduces the number of trainable parameters while maintaining performance (Hu et al., 2022).

DyLoRA (Dynamic Low-Rank Adaptation)

Valipour et al. (2022) extended LoRA with DyLoRA, allowing dynamic adjustment of the rank during training. This flexibility enhances adaptability to diverse tasks (Valipour et al., 2022).

Adapters

Houlsby et al. (2019) proposed adapters as modular structures that capture task-specific information without altering the original model weights, enabling efficient multi-task learning (Houlsby et al., 2019).

AdapterDrop

Rücklé et al. (2021) introduced AdapterDrop, which removes adapters from the lower Transformer layers during training and inference, reducing computational overhead while largely preserving task performance (Rücklé, Pfeiffer, & Gurevych, 2021).

BitFit

Ben Zaken et al. (2022) developed BitFit, a method that fine-tunes only the bias parameters of the model, drastically reducing the number of trainable parameters (Ben Zaken, Goldberg, & Ravfogel, 2022).

Prompt Tuning (Soft Prompts)

Lester et al. (2021) explored prompt tuning, where continuous prompts are optimized to guide the model's output without changing the model weights (Lester, Al-Rfou, & Constant, 2021).

Prompt Tuning (Instability / Initialization)

Liu et al. (2023) provided a systematic survey of prompting methods, highlighting issues related to initialization and stability in prompt tuning (Liu, Yuan, Fu, Jiang, Hayashi, & Neubig, 2023).

Performance Comparisons (LoRA vs. P-tuning, etc.)

Li and Liang (2021) introduced prefix-tuning, which later work commonly uses as a baseline when comparing methods such as LoRA and P-tuning (Li & Liang, 2021).

Continual Learning and Passage Re-ranking

For continual learning and passage re-ranking, suggested studies include De Lange et al. (2022) and Nogueira & Cho (2019), which provide foundational insights (De Lange et al., 2022; Nogueira & Cho, 2019).

Evaluation Beyond Task Accuracy

Ding et al. (2023) emphasized evaluating methods based on memory footprint and speed, in addition to task accuracy (Ding et al., 2023).

Real-world System-level Benchmarks

Shahrad et al. (2020) characterized serverless workloads at a large cloud provider, offering practical insights into system-level benchmarks (Shahrad, Fonseca, et al., 2020).

Training Efficiency

Techniques like MeZO and gradient checkpointing are discussed in Malladi et al. (2023) and Chen et al. (2016), enhancing training efficiency (Malladi et al., 2023; Chen, Xu, Zhang, & Guestrin, 2016).

Case Study: Customer Support Chatbot

Xu et al. (2017) presented a case study on developing a customer service chatbot, illustrating practical applications of tuning methods (Xu, Liu, Guo, Sinha, & Akkiraju, 2017).

Adapters in Multi-tenant Cloud

Suggested studies include Chen et al. (2023) and Houlsby et al. (2019), which explore the use of adapters in multi-tenant environments (Chen et al., 2023; Houlsby et al., 2019).

Visual Aids (Performance Comparisons)

He et al. (2022) provided a unified view of parameter-efficient transfer learning, offering visual comparisons and insights (He, Zhou, Ma, Berg-Kirkpatrick, & Neubig, 2022).

1. Introduction

Large Models (LMs)—often scaling to billions or trillions of parameters—have transformed artificial intelligence, excelling in tasks such as language understanding [1][2], machine translation [3][4], dialogue systems [5][6][7], and summarization [8], among others. Their foundation lies in Transformer architectures, which utilize attention mechanisms and extensive pretraining on large, unlabeled datasets, enabling exceptional performance in complex reasoning, contextual understanding, and content generation. However, the full fine-tuning of such models poses significant challenges due to immense computational costs, memory demands, and energy consumption, making it economically and environmentally unsustainable, particularly in resource-constrained environments. Addressing these challenges, Parameter-Efficient Fine-Tuning (PEFT) has emerged as a groundbreaking paradigm that modifies or introduces only a small fraction of model parameters while keeping the rest frozen. This approach significantly reduces computational and memory overhead during training and inference, accelerating fine-tuning and making it cost-effective and accessible even in low-resource settings [25][26][27]. Initially gaining traction in natural language processing (NLP) tasks—such as sentiment analysis, question answering, and machine translation—PEFT has expanded to other domains, including vision transformers (ViTs) for image classification and object detection, vision-language (VL) alignment models for bridging textual and visual modalities, and diffusion models for generative applications like text-to-image synthesis. The versatility of PEFT highlights its capacity to enable scalable, efficient model adaptation across diverse modalities and architectures, maintaining the performance of large models while mitigating the resource demands associated with full fine-tuning.

1.1. Goal of this Survey


2. Background

2.1 Core Concepts of Large Language Models

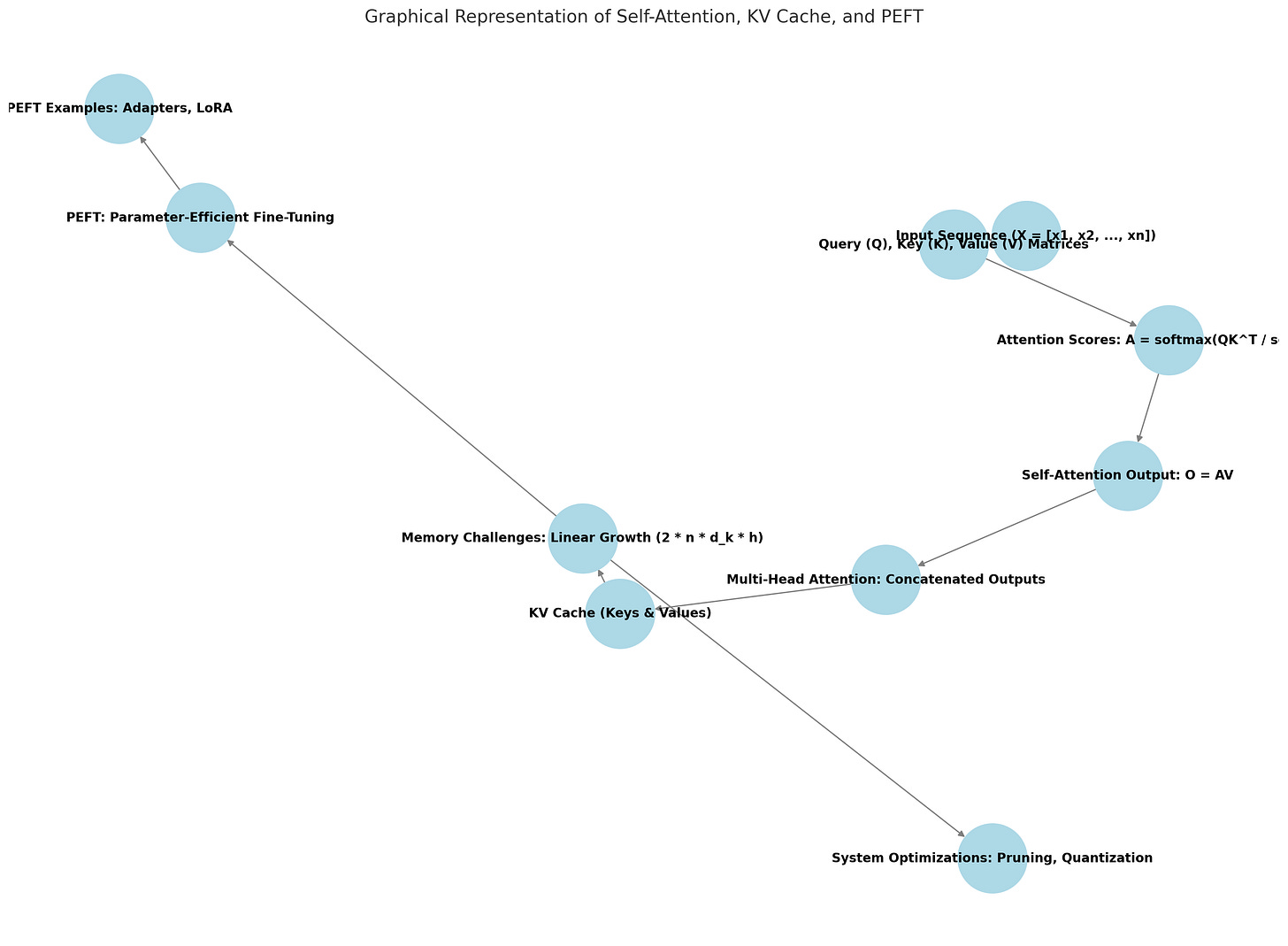

Large Language Models (LLMs), such as GPT-3 [1], LLaMA [9], and PaLM [12], are built on the Transformer architecture [10], which serves as the foundation for modern natural language processing systems. The Transformer employs self-attention mechanisms to efficiently model long-range dependencies within text sequences, making it particularly effective for pretraining on vast corpora of unlabeled text. This extensive pretraining enables LLMs to generalize across a diverse range of downstream tasks, demonstrating exceptional versatility and performance.

For autoregressive text generation, LLMs generate text by predicting the next token in a sequence based on the preceding context, processing input token by token. During this process, the model leverages key-value (KV) caching to store intermediate representations from previous decoding steps, reducing redundant computations and improving token prediction efficiency. However, the KV cache introduces significant memory challenges, particularly during large-scale inference in real-time applications or multi-tenant environments. As queries accumulate, the memory demands of storing KV caches can quickly exhaust available resources, creating scalability bottlenecks [25]. These challenges grow with the increasing size and complexity of LLMs, underscoring the need for innovative fine-tuning and deployment strategies that address both computational and memory constraints.
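The memory pressure described above can be estimated with simple arithmetic. The sketch below assumes a standard multi-head attention cache that stores one key tensor and one value tensor per layer; the model dimensions are hypothetical and chosen only for illustration.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len,
                   batch_size=1, bytes_per_value=2):
    """Bytes needed to cache keys and values for every layer and position.

    bytes_per_value=2 assumes a 16-bit cache (an assumption, not a
    property of any particular model).
    """
    # Factor 2: one tensor for keys and one for values.
    return (2 * num_layers * num_kv_heads * head_dim
            * seq_len * batch_size * bytes_per_value)


# Hypothetical model: 32 layers, 32 heads of dimension 128.
per_request = kv_cache_bytes(32, 32, 128, seq_len=2048)
print(f"{per_request / 2**30:.2f} GiB per 2048-token request")
```

Because the cache grows linearly with both sequence length and the number of concurrent requests, a serving system with many simultaneous users can exhaust accelerator memory well before compute becomes the bottleneck.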

Core Concepts and Motivations for Parameter Efficiency

Large Language Models (LLMs), such as GPT-3 [1], LLaMA [9], and PaLM [12], have transformed natural language processing with their ability to generalize across diverse tasks. These models are built on the Transformer architecture [10], which employs self-attention mechanisms to model long-range dependencies within text sequences efficiently. Pretraining on vast corpora of unlabeled text allows LLMs to develop broad language representations, enabling exceptional performance in downstream tasks. For autoregressive text generation, LLMs predict the next token in a sequence based on preceding context, leveraging key-value (KV) caching to reduce redundant computations. However, while KV caching enhances efficiency, it also introduces significant memory challenges in large-scale deployments, particularly in real-time applications or multi-tenant environments [25]. The growing scale and complexity of LLMs exacerbate these challenges, necessitating innovative strategies for efficient fine-tuning and deployment.

The dramatic increase in LLM size, from GPT-2’s 1.5 billion parameters to GPT-3’s 175 billion parameters [1], has made full fine-tuning computationally and economically impractical. Fine-tuning all model parameters not only demands access to massive GPU clusters but also risks overfitting, where the model performs well on task-specific data but struggles to generalize. Additionally, it can lead to catastrophic forgetting [170], where the model loses valuable pretrained knowledge. Full fine-tuning also requires significant memory for storing the gradients and optimizer states of every parameter during training, which is particularly problematic in multi-tenant or multi-model scenarios [25][132]. To overcome these limitations, Parameter-Efficient Fine-Tuning (PEFT) has emerged as a practical solution. By modifying only a small subset of parameters or introducing lightweight, task-specific modules, PEFT significantly reduces computational and memory overhead while preserving the model’s ability to generalize and retain pretrained knowledge. This approach ensures scalability and accessibility, making it possible to adapt large LLMs efficiently across diverse applications while addressing real-world constraints.
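To make the memory argument concrete, the following back-of-the-envelope sketch compares the training state of full fine-tuning with a PEFT setup. It assumes 16-bit weights and gradients plus 12 bytes of Adam-style optimizer state per trainable parameter, and it ignores activations; these byte counts and the 0.1% trainable fraction are illustrative assumptions, not figures from this survey.

```python
def training_state_bytes(total_params, trainable_params,
                         weight_bytes=2, grad_bytes=2, optimizer_bytes=12):
    """Approximate bytes for weights, gradients, and optimizer state.

    Frozen parameters only need their weights; trainable parameters also
    need a gradient and optimizer state (byte sizes are assumptions).
    """
    frozen = total_params - trainable_params
    per_trainable = weight_bytes + grad_bytes + optimizer_bytes
    return frozen * weight_bytes + trainable_params * per_trainable


total = 175_000_000_000                                       # GPT-3 scale
full = training_state_bytes(total, total)                     # everything trainable
peft = training_state_bytes(total, total // 1000)             # 0.1% trainable
print(f"full fine-tuning: {full / 1e9:,.0f} GB")
print(f"PEFT (0.1%):      {peft / 1e9:,.0f} GB")
```

Under these assumptions the frozen weights dominate the PEFT footprint, which is why PEFT is often paired with quantization of the backbone.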

2.2 Overview of Parameter-Efficient Fine-Tuning (PEFT)

Parameter-Efficient Fine-Tuning (PEFT) offers a practical solution to the challenges of adapting large language models (LLMs) for specific tasks by freezing most of the pretrained backbone and introducing minimal, task-specific modifications. This approach preserves the model's general knowledge while enabling efficient customization for downstream tasks. By targeting only a small fraction of the model’s parameters or incorporating lightweight components, PEFT significantly reduces the computational and memory requirements associated with full fine-tuning while maintaining the model's expressive power.

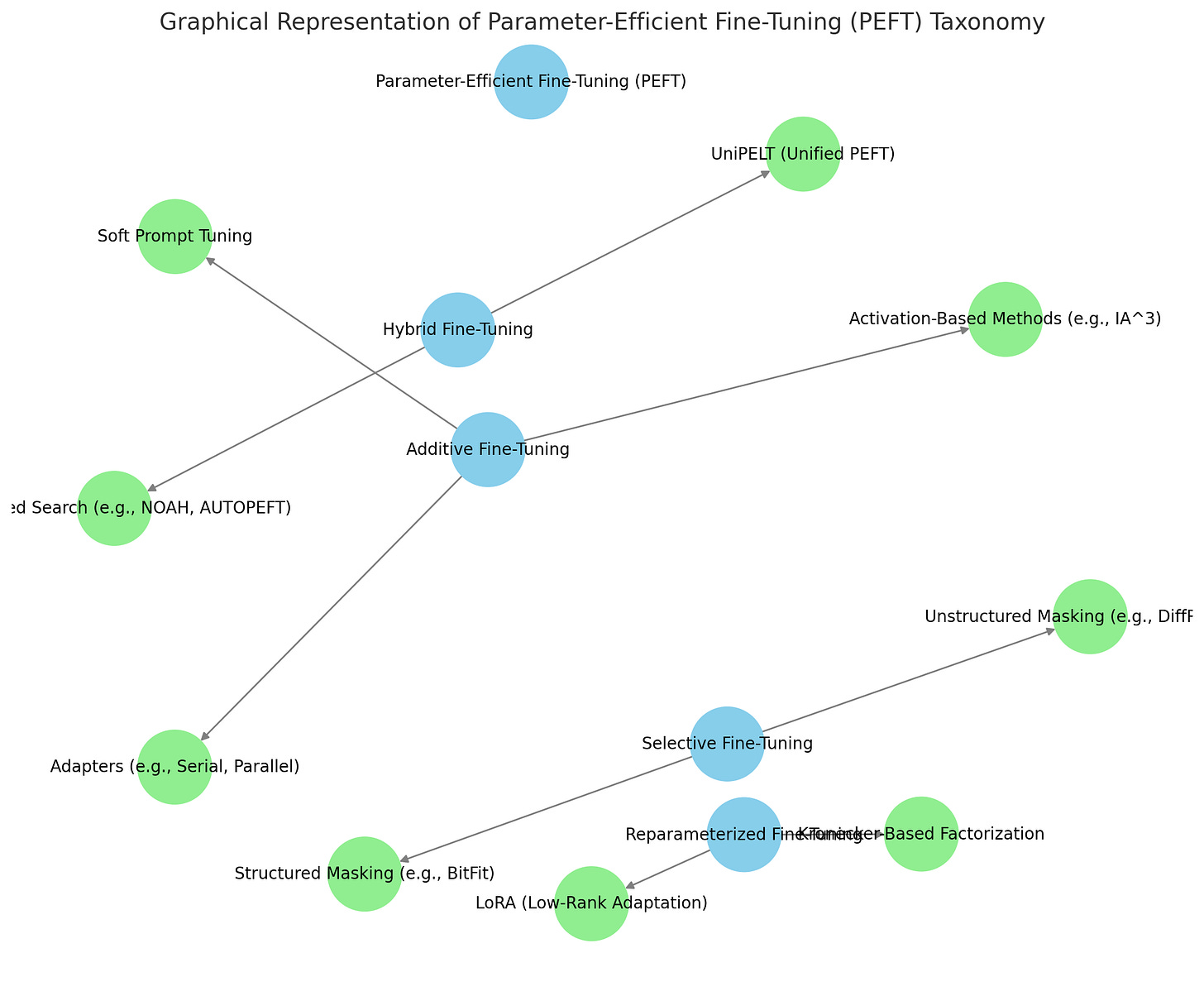

PEFT methods can be broadly categorized as follows:

Additive Modules: These methods introduce new trainable parameters into the model without altering the pretrained weights. For example, Adapters [31] are small neural networks inserted between layers of the Transformer, enabling task-specific adjustments. Similarly, Soft Prompts [41] prepend learnable embeddings to the input sequence, guiding the model’s pretrained knowledge toward specific tasks.

Selective Masking: This approach selectively fine-tunes a subset of the model's original parameters, leaving the rest frozen. BitFit [72], for instance, updates only the bias terms of the model, while other techniques employ learned masks [63] to dynamically identify and update critical parameters, reducing the overall training burden.

Reparameterized Low-Rank Factors: These methods reparameterize the model’s weight matrices into low-rank components, effectively reducing the number of trainable parameters while preserving the model's capacity. Low-Rank Adaptation (LoRA) [76] exemplifies this approach by injecting low-rank matrices into the model’s layers, offering a balance between efficiency and task-specific expressiveness.

Hybrid or Unified Approaches: Combining multiple PEFT strategies, these methods aim to optimize performance and flexibility. For instance, UniPELT [97] integrates techniques like LoRA, prompt tuning, and adapters into a unified framework, while AUTOPEFT [100] uses automated search to identify the best combination of PEFT strategies for a given task.

By leveraging these innovative techniques, PEFT enables scalable and resource-efficient adaptation of LLMs across diverse tasks and domains. This approach ensures that models retain their pretrained knowledge while addressing the practical constraints of memory, computation, and scalability, making PEFT a critical tool in modern machine learning workflows.

2.3 Downstream Tasks

Parameter-Efficient Fine-Tuning (PEFT) methods have demonstrated their effectiveness across a broad spectrum of downstream tasks, spanning multiple domains and modalities:

Natural Language Processing (NLP): PEFT has been extensively evaluated using standard benchmarks like GLUE [11] and SuperGLUE [25], which assess model performance on tasks such as sentiment analysis, question answering, and natural language inference. These benchmarks provide controlled environments to measure a model’s generalization and task-specific performance. Additionally, real-world datasets like ShareGPT [28] offer a more practical perspective, focusing on a model's ability to handle diverse and unpredictable user interactions in conversational contexts.

Vision Tasks: In computer vision, PEFT has been widely applied to Vision Transformers (ViTs), which excel in various image-related tasks. Common benchmarks include ImageNet, which evaluates image classification, MSCOCO [22] for object detection and segmentation, and ADE20K [23] for semantic segmentation. These benchmarks test the adaptability of PEFT methods in fine-tuning ViTs for specialized visual challenges, enabling scalable model deployment across vision applications.

Cross-Modal Tasks: For models that integrate text and vision modalities, PEFT has proven invaluable in adapting models to tasks such as visual question answering (VQA) [159], where a model answers questions based on an image, image captioning [155], which involves generating textual descriptions of images, and open-vocabulary classification [216][217], where models classify images into previously unseen categories. These tasks highlight PEFT’s capability to fine-tune large multimodal models efficiently while preserving their generalization across modalities.

Diffusion Models: In generative modeling, PEFT has been applied to diffusion models for text-to-image generation. Representative methods such as GLIGEN [233] and ControlNet [240] attach trainable conditioning modules to a frozen diffusion backbone, and the resulting models are assessed on the quality, fidelity, and diversity of the generated images. By reducing fine-tuning overhead, PEFT methods enhance the practical utility of diffusion models for creative and domain-specific generative tasks.

By enabling efficient adaptation to these diverse tasks, PEFT has consistently delivered state-of-the-art or near state-of-the-art performance with minimal resource requirements. This versatility underscores the transformative impact of PEFT techniques, allowing large models to scale effectively across a variety of domains while addressing the computational challenges of traditional fine-tuning approaches.

2.4 Evaluation Benchmarks for PEFT

Parameter-Efficient Fine-Tuning (PEFT) methods are evaluated on two critical dimensions: algorithmic performance and system-level efficiency. Together, these dimensions provide a holistic understanding of the effectiveness and practicality of PEFT implementations.

Algorithmic Benchmarks:

Algorithmic benchmarks focus on how well PEFT methods adapt large models to new tasks while minimizing computational overhead. Common metrics include:

Task Accuracy: Measures the fine-tuned model's performance on the target task, such as sentiment analysis or image classification.

Parameter Count: Assesses the number of parameters modified or added during fine-tuning, reflecting the method's efficiency and lightweight nature.

Training Stability: Evaluates the consistency and reliability of the fine-tuning process, considering factors such as convergence speed and performance fluctuations.

Recent studies [25][26][27] have established these metrics as standard for comparing different PEFT methods, emphasizing their ability to balance efficiency and performance.
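Two of these metrics are easy to compute directly. The sketch below reports the trainable-parameter fraction and summarizes training stability as the mean and standard deviation of a score across random seeds; the function names and the choice of standard deviation as the stability summary are assumptions made for illustration.

```python
from statistics import mean, stdev


def trainable_fraction(param_counts, trainable_names):
    """Share of parameters that are updated during fine-tuning.

    param_counts maps a (hypothetical) module name to its parameter count.
    """
    total = sum(param_counts.values())
    trainable = sum(n for name, n in param_counts.items()
                    if name in trainable_names)
    return trainable / total


def stability(scores_per_seed):
    """Mean and sample standard deviation of a metric across seeds."""
    return mean(scores_per_seed), stdev(scores_per_seed)
```

For example, `trainable_fraction({"backbone": 990, "adapter": 10}, {"adapter"})` gives 0.01, and a smaller standard deviation from `stability` indicates a method that is less sensitive to initialization.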

System-Level Benchmarks:

System-level benchmarks extend beyond task-specific performance to assess the scalability and efficiency of PEFT implementations in real-world scenarios. These include:

Real-World Datasets:

ShareGPT [28]: Captures diverse user interactions with chat-based systems, providing a practical measure of how well a PEFT method handles dynamic query patterns in conversational AI.

Simulated Environments:

Azure Function Traces [29]: Mimic large-scale, serverless computing setups to evaluate throughput (requests processed per unit time) and latency (time to process a request), crucial metrics for assessing real-time performance in production environments.

Synthetic Workload Generators:

Gamma Process Simulations [30]: Use statistical distributions (e.g., Poisson or Gamma) to create artificial query patterns, testing a system's ability to manage concurrency, load balancing, and stability under varying user demand.
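A synthetic workload of this kind can be sketched in a few lines. The generator below draws Gamma-distributed gaps between requests; the parameterization by mean rate and coefficient of variation is an assumption chosen for convenience, with a coefficient of variation of 1 reducing to a Poisson process and larger values producing burstier traffic.

```python
import random


def gamma_arrivals(rate, cv, duration, seed=0):
    """Request timestamps with Gamma-distributed inter-arrival gaps.

    rate: mean requests per second.
    cv: coefficient of variation of the gaps (cv = 1 gives Poisson arrivals).
    duration: length of the simulated trace in seconds.
    """
    rng = random.Random(seed)
    shape = 1.0 / cv ** 2
    scale = cv ** 2 / rate            # mean gap = shape * scale = 1 / rate
    now, arrivals = 0.0, []
    while True:
        now += rng.gammavariate(shape, scale)
        if now >= duration:
            return arrivals
        arrivals.append(now)


trace = gamma_arrivals(rate=10.0, cv=1.0, duration=100.0)
print(f"{len(trace)} requests in 100 s")
```

Replaying such a trace against a serving system makes it possible to measure throughput and latency under controlled levels of burstiness.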

These benchmarks provide a comprehensive view of PEFT's strengths and limitations, from algorithmic design to practical deployment. By addressing both dimensions, researchers can ensure that PEFT methods are not only effective in theory but also robust, scalable, and ready for real-world applications. Such evaluations are essential for driving innovation in PEFT techniques and unlocking the full potential of large models while addressing the constraints of computational resources and deployment environments.

3. PEFT Taxonomy

3.1 Additive Fine-Tuning

Additive fine-tuning is a prominent category of Parameter-Efficient Fine-Tuning (PEFT) methods that enables large language models (LLMs) to adapt to downstream tasks by introducing new trainable parameters or modules. This approach keeps the pretrained backbone weights frozen, preserving the model's general knowledge while facilitating task-specific learning through the newly added parameters.

Adapters

Adapters are small, lightweight layers designed to capture task-specific information. They are strategically inserted into the Transformer architecture and function as modular components that allow efficient fine-tuning. Adapters can be configured in the following ways:

Serial Adapters: These adapters [31] are inserted sequentially after each sublayer within the Transformer. By creating a straightforward pipeline for task-specific learning, they enable efficient adaptation without altering the main architecture.

Parallel Adapters: In this configuration [32][33], adapters operate as side networks that process input in parallel with the main sublayers. The outputs from the adapters are then merged with the outputs of the primary layers, providing a more flexible integration of task-specific modifications.
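The difference between the two placements can be illustrated with a minimal bottleneck adapter. This is a sketch in plain Python lists rather than a framework implementation; the ReLU bottleneck and the helper names are assumptions, and `sublayer` stands in for any frozen Transformer sublayer.

```python
def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def add(a, b):
    return [ai + bi for ai, bi in zip(a, b)]


def adapter(x, W_down, W_up):
    """Bottleneck: project down, apply ReLU, project back up."""
    hidden = [max(0.0, v) for v in matvec(W_down, x)]
    return matvec(W_up, hidden)


def serial_adapter(x, sublayer, W_down, W_up):
    """Adapter consumes the sublayer output and is added back residually."""
    h = sublayer(x)
    return add(h, adapter(h, W_down, W_up))


def parallel_adapter(x, sublayer, W_down, W_up):
    """Adapter consumes the sublayer input and runs alongside the sublayer."""
    return add(sublayer(x), adapter(x, W_down, W_up))
```

If `W_up` is initialized to zeros, both variants initially reproduce the frozen sublayer exactly, so training starts from the pretrained behavior. Only `W_down` and `W_up` would be trained.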

Advanced Adapter Techniques for Multi-Task Learning

Adapters are particularly well-suited for multi-task learning scenarios where shared knowledge across tasks can improve overall performance. Advanced techniques build on the basic adapter architecture to enhance their utility:

AdapterFusion [35]: Combines multiple task-specific adapters into a unified framework, enabling the model to leverage knowledge from previously trained adapters.

AdaMix [36]: Introduces stochastic mixing of adapter layers to dynamically balance shared and task-specific knowledge.

MerA (Mergeable Adapters) [39]: Merges adapters trained on different tasks into a cohesive system, allowing efficient adaptation across multiple domains.

By employing these advanced techniques, adapters facilitate efficient task adaptation and knowledge sharing in multi-task environments, further extending the flexibility of additive fine-tuning.

Key Benefits of Additive Fine-Tuning

Additive fine-tuning preserves the pretrained model’s general knowledge, minimizes computational overhead, and ensures task-specific adaptability. The modular nature of adapters allows easy integration and reusability across tasks, making them a versatile and effective tool for fine-tuning large models. This approach highlights the broader utility of additive methods in enabling scalable and efficient adaptation of LLMs to a diverse range of applications.

Soft Prompt Tuning

Soft prompt tuning is a parameter-efficient fine-tuning technique that modifies a model's input sequence or hidden layers by adding learnable tokens, which are optimized during training while keeping the pretrained model's core parameters frozen. This approach ensures efficient task adaptation without compromising the model's general knowledge.

Key Variants of Soft Prompt Tuning

Prefix-Tuning [41]:

Inserts trainable prefixes into the input sequence.

Only the prefix tokens are optimized during training, leaving the backbone weights frozen.

Suitable for a wide range of tasks with minimal computational overhead.

P-Tuning [45]:

Extends prefix-tuning by introducing task-specific learnable tokens into intermediate layers.

Allows for deeper task-specific customization, improving adaptation to complex scenarios.

Prompt-Tuning [46]:

Focuses on optimizing input-level tokens to enhance generalization across tasks.

Particularly effective for few-shot learning scenarios, but can face challenges with stability during training.
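The input-level variant can be sketched as a small table of trainable embeddings that is concatenated in front of the (frozen) token embeddings. The class name, the Gaussian initialization, and the dimensions below are illustrative assumptions.

```python
import random


class SoftPrompt:
    """A block of learnable prompt embeddings prepended to the input."""

    def __init__(self, num_tokens, d_model, seed=0):
        rng = random.Random(seed)
        # Small random initialization (an assumption; other schemes exist).
        self.embeddings = [[rng.gauss(0.0, 0.02) for _ in range(d_model)]
                           for _ in range(num_tokens)]

    def num_parameters(self):
        return len(self.embeddings) * len(self.embeddings[0])

    def prepend(self, input_embeddings):
        """Return the sequence [prompt tokens ; input tokens]."""
        return self.embeddings + input_embeddings


prompt = SoftPrompt(num_tokens=20, d_model=768)
print(prompt.num_parameters())   # 20 * 768 = 15360 trainable values
```

Only `prompt.embeddings` would receive gradient updates; the backbone sees a longer input sequence but is otherwise unchanged.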

Addressing Stability Challenges

Soft prompt tuning techniques, while simple and effective, can encounter stability issues during training, such as slow convergence or performance fluctuations [52][53]. To mitigate these issues, researchers have developed advanced strategies:

InfoPrompt [54]: Utilizes mutual information-based loss functions to encourage the learnable tokens to encode task-relevant information, leading to more stable and faster convergence.

DePT (Decomposition-based Prompt Tuning) [58]: Decomposes prompts into low-rank matrices and shorter token representations, improving both training stability and efficiency.

SPT (Selective Prompt Tuning) [50]: Introduces a gating mechanism to selectively apply prompt tokens at different layers, enhancing training robustness and overall performance.

Other Activation Modifiers

In addition to soft prompt tuning, other parameter-efficient methods modify intermediate activations within the Transformer architecture to enable efficient fine-tuning:

(IA)^3 (Infused Adapter by Inhibiting and Amplifying Inner Activations) [59]: Scales intermediate activations in the Transformer with learnable vectors, achieving strong performance while updating minimal parameters.

SSF (Scaling and Shifting Features) [61]: Adjusts intermediate activations by applying scaling and shifting transformations. After training, these modifications can be merged into the frozen backbone, minimizing inference overhead.
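The merging property can be checked on a toy example. The sketch below assumes the scale and shift are applied element-wise to the output of a linear layer; under that assumption they fold into the layer's weight and bias, so inference needs no extra operations. Setting the shift to zero gives a scaling-only modifier in the spirit of (IA)^3.

```python
def linear(W, b, x):
    """y = W x + b for a matrix stored as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi
            for row, bi in zip(W, b)]


def scale_shift(gamma, beta, h):
    """Element-wise learnable scale (gamma) and shift (beta)."""
    return [g * v + s for g, v, s in zip(gamma, h, beta)]


def merge_scale_shift(W, b, gamma, beta):
    """Fold gamma and beta into the preceding linear layer."""
    W_merged = [[g * w for w in row] for g, row in zip(gamma, W)]
    b_merged = [g * bi + s for g, bi, s in zip(gamma, b, beta)]
    return W_merged, b_merged
```

For any input `x`, `linear(*merge_scale_shift(W, b, gamma, beta), x)` matches `scale_shift(gamma, beta, linear(W, b, x))` up to floating-point rounding.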

Advantages and Applications

Soft prompt tuning and activation modification techniques provide a balance between task performance, computational efficiency, and training stability. By leveraging learnable tokens or activation adjustments, these methods enable efficient adaptation of large language models (LLMs) to diverse downstream tasks, maintaining high performance while reducing the resource demands typically associated with full fine-tuning.

3.2 Selective Fine-Tuning

Selective fine-tuning is a Parameter-Efficient Fine-Tuning (PEFT) technique that focuses on adapting large pretrained models to downstream tasks by updating only a carefully chosen subset of their parameters. This targeted approach reduces computational and memory demands while enabling effective task-specific customization. Selective fine-tuning methods are broadly classified into unstructured masking and structured masking.

Unstructured Masking

Unstructured masking applies sparse masks to the model's parameters, identifying and updating only the most influential ones for a given task. This approach independently evaluates each parameter’s importance, freezing those deemed less critical.

DiffPruning [63]: Learns a sparse, task-specific difference vector that is added to the frozen pretrained parameters, using a sparsity-inducing penalty so that only the most impactful parameters change during task adaptation.

FishMask [66] and SAM (Second-order Approximation Method) [69]: These methods use Fisher information or second-order approximations to estimate parameter importance and select the key parameters for fine-tuning. They are particularly effective in resource-constrained settings, where computational efficiency is critical.
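The general idea of importance-based masking can be sketched as follows. This is a simplified illustration rather than any published method's exact procedure: importance is approximated by the mean squared gradient per parameter (an empirical Fisher estimate), the top-k parameters are kept trainable, and the update rule is plain SGD.

```python
def fisher_scores(per_example_grads):
    """Mean squared gradient of each parameter over a batch of examples."""
    n = len(per_example_grads)
    dim = len(per_example_grads[0])
    return [sum(g[i] ** 2 for g in per_example_grads) / n for i in range(dim)]


def top_k_mask(scores, k):
    """Binary mask that keeps the k highest-scoring parameters trainable."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    keep = set(ranked[:k])
    return [1 if i in keep else 0 for i in range(len(scores))]


def masked_sgd_step(params, grads, mask, lr):
    """Update only the parameters selected by the mask."""
    return [p - lr * g * m for p, g, m in zip(params, grads, mask)]
```

Parameters with a mask value of 0 never change, so only the indices and values of the selected parameters need to be stored per task.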

Structured Masking

Structured masking focuses on updating entire groups or modules of parameters, aligning better with hardware architectures for optimized training and inference.

BitFit [72]: Fine-tunes only the bias terms of the model, which constitute less than 0.1% of the total parameters in BERT-based models. Despite its simplicity, BitFit achieves performance comparable to full fine-tuning in many scenarios, showcasing the importance of bias terms in model adaptation.

Xattn Tuning [73]: Selectively fine-tunes cross-attention layers in the model. By focusing on these layers, Xattn Tuning ensures hardware-friendly operations while maintaining high efficiency.
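Structured selection often reduces to filtering parameters by name. The sketch below illustrates a BitFit-style split on a hypothetical feed-forward block; the parameter names and shapes are invented for illustration, and the suffix convention `bias` is an assumption about how parameters are named.

```python
from math import prod


def bitfit_split(param_shapes):
    """Separate bias parameters (trainable) from everything else (frozen)."""
    trainable = {n: s for n, s in param_shapes.items() if n.endswith("bias")}
    frozen = {n: s for n, s in param_shapes.items() if n not in trainable}
    return trainable, frozen


def count(shapes):
    """Total number of scalar parameters in a name-to-shape mapping."""
    return sum(prod(shape) for shape in shapes.values())


# Hypothetical parameter names and shapes for one feed-forward block.
shapes = {
    "ffn.dense_in.weight": (3072, 768),
    "ffn.dense_in.bias": (3072,),
    "ffn.dense_out.weight": (768, 3072),
    "ffn.dense_out.bias": (768,),
}
trainable, frozen = bitfit_split(shapes)
print(f"trainable fraction: {count(trainable) / count(shapes):.4%}")
```

The same pattern extends to other structured choices, for example keeping only parameters whose names mark them as cross-attention layers.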

Advantages and Applications

Selective fine-tuning provides a practical alternative to full fine-tuning by strategically focusing on the most relevant parameters. Unstructured masking offers flexibility and efficiency, particularly in low-resource environments, while structured masking enhances hardware compatibility and simplifies training processes. By reducing computational and memory requirements without significantly compromising task performance, selective fine-tuning has emerged as a valuable tool for adapting large pretrained models to diverse downstream tasks efficiently.

3.3 Re-parameterized Fine-Tuning

Re-parameterized fine-tuning focuses on factorizing large weight matrices or embedding them into low-rank spaces, significantly reducing the number of trainable parameters.

LoRA and Variants

Low-Rank Adaptation (LoRA) [76] freezes the pretrained weight matrix W and learns a low-rank update ΔW, expressed as the product of two small matrices, that is added to the original weights. LoRA has inspired several enhancements:

Dynamic Rank Selection [82]–[84]: These methods adapt the rank dynamically during training, ensuring optimal parameter usage.

Gated LoRA [84]: Introduces gating mechanisms to control the contribution of low-rank factors, enhancing task-specific adaptability.

Magnitude and Direction Decomposition [81]: Separates weight updates into magnitude and direction, providing finer control over parameter adjustments.
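The basic LoRA update that these variants build on can be written compactly. The sketch below uses plain Python lists and assumes the common formulation in which the update is the product of a d x r matrix B and an r x k matrix A, scaled by alpha / r; the helper names are illustrative.

```python
def matmul(A, B):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]


def lora_merge(W, A, B, alpha, r):
    """Return W + (alpha / r) * B @ A, the merged weight used at inference."""
    delta = matmul(B, A)                      # (d x r) @ (r x k) -> d x k
    scale = alpha / r
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(W, delta)]


def lora_param_counts(d, k, r):
    """(parameters in the full matrix, parameters in the low-rank factors)."""
    return d * k, r * (d + k)


full, low_rank = lora_param_counts(d=4096, k=4096, r=8)
print(f"trainable share: {low_rank / full:.4%}")
```

Initializing B to zeros makes the initial update zero, so training starts from the pretrained model. Because the update can be merged into W after training, the adapted model adds no inference latency.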

Advanced Factorizations

Beyond LoRA, other methods leverage advanced decompositions to optimize parameter usage:

Compacter [77]: Utilizes parameter-efficient Kronecker-product expansions, reducing the dimensionality of the updates.

KronA [78]: Extends Kronecker-based approaches to capture more complex transformations with fewer parameters.
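The parameter savings of Kronecker-based factorizations follow from the shape of the product. The sketch below shows a generic Kronecker product of two small matrices; it illustrates the underlying operation only and is not the specific layer design of either method.

```python
def kron(A, B):
    """Kronecker product of A (m x n) and B (p x q), giving (m*p) x (n*q)."""
    return [[a * b for a in row_a for b in row_b]
            for row_a in A for row_b in B]


def kron_param_counts(m, n, p, q):
    """(entries in the full matrix, entries in the two factors)."""
    return (m * p) * (n * q), m * n + p * q


A = [[1, 2], [3, 4]]
B = [[0, 1], [1, 0]]
for row in kron(A, B):
    print(row)
```

A 1024 x 1024 update built from two 32 x 32 factors, for instance, needs 2,048 stored values instead of 1,048,576.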

These reparameterized methods ensure that large-scale models can be fine-tuned efficiently while maintaining high performance on downstream tasks.

3.4 Hybrid Fine-Tuning

Hybrid fine-tuning combines multiple Parameter-Efficient Fine-Tuning (PEFT) strategies into a unified approach, leveraging the strengths of individual methods to optimize the adaptation of large models for diverse downstream tasks. By employing automated or heuristic techniques, hybrid fine-tuning identifies the most effective combinations of PEFT methods, maximizing performance and efficiency.

Unified Approaches

Unified methods integrate different PEFT techniques within a single framework, providing flexibility and adaptability for task-specific fine-tuning:

UniPELT [97]: Combines LoRA (Low-Rank Adaptation), prefix-tuning, and adapters, strategically distributing these techniques across different layers of the model to achieve optimal task performance.

MAM Adapter [10]: Takes a similar multi-technique approach, combining prefix-tuning in the attention sublayers with scaled parallel adapters in the feed-forward sublayers to enhance modularity and efficiency.

LLM-Adapters [101]: Focuses on merging various adapter types to meet the specific and diverse requirements of large language models, emphasizing modularity and adaptability.

NAS-Based Optimization

Neural Architecture Search (NAS) and automated configuration exploration play a critical role in hybrid fine-tuning, enabling systematic identification of the best configurations for a given task:

NOAH [99]: Utilizes NAS to explore and optimize combinations of adapters, LoRA, and prompt-tuning configurations, systematically identifying the most effective setup for each task.

AUTOPEFT [100]: Employs high-dimensional Bayesian optimization to efficiently search the vast configuration space of PEFT techniques. This automated process ensures both effective and resource-efficient fine-tuning, tailored to the specific requirements of the task.

Advantages and Applications

Hybrid fine-tuning adapts large models by exploiting the complementary strengths of multiple PEFT strategies and, in the NAS-based variants, by searching for an effective combination automatically. Compared with a single method, this provides greater flexibility, modularity, and adaptability, and it can improve the balance between performance and efficiency across diverse downstream tasks. Hybrid fine-tuning therefore represents a significant step toward practical, scalable, and efficient fine-tuning of large language models.

4.1 KV-Cache Management for PEFT Efficiency

In auto-regressive decoding, large language models (LLMs) utilize key-value (KV) caches to store activations from previous decoding steps, reducing redundant computations and improving inference efficiency. However, as sequence lengths grow and additional adapter layers are introduced, these KV caches can expand substantially, leading to significant memory challenges, particularly in multi-adapter setups [25].

S-LoRA [140] addresses this issue with a unified paging mechanism that manages KV-cache tensors and adapter weights in a single memory pool divided into small, reusable pages. Because pages need not be contiguous, this organization minimizes fragmentation and optimizes memory usage during large-scale multi-adapter serving. By allocating and releasing pages dynamically, S-LoRA uses hardware resources efficiently and supports reliable real-time inference, even under heavy workloads.

This approach not only mitigates memory constraints but also enhances the scalability of multi-adapter systems, making it a practical solution for deploying PEFT methods in resource-intensive applications.
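The page-based idea can be illustrated with a toy allocator. This is a minimal sketch of fixed-size paging in general, not S-LoRA's implementation; the class `PagedPool`, the tag strings, and the choice to let adapter weights and KV-cache entries share one pool are assumptions made for illustration.

```python
class PagedPool:
    """Toy fixed-size page allocator shared by KV-cache entries and adapter weights."""

    def __init__(self, num_pages: int):
        self.free = list(range(num_pages))   # indices of unused pages
        self.owner = {}                      # tag -> list of page indices held by that tag

    def allocate(self, tag: str, pages: int) -> list:
        if pages > len(self.free):
            raise MemoryError(f"need {pages} pages, only {len(self.free)} free")
        got = [self.free.pop() for _ in range(pages)]
        self.owner.setdefault(tag, []).extend(got)
        return got

    def release(self, tag: str) -> None:
        # Return every page held by `tag` to the free list.
        self.free.extend(self.owner.pop(tag, []))

pool = PagedPool(num_pages=8)
pool.allocate("adapter:A", 2)        # adapter weights occupy pages
pool.allocate("kv:request-1", 3)     # KV cache for a request served with adapter A
pool.release("kv:request-1")         # request finishes; its pages return to the pool
pool.allocate("adapter:B", 4)        # reused pages need not be contiguous
print(len(pool.free))
```

Since any free page can serve any tenant, releasing a finished request never leaves an unusable hole between allocations.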

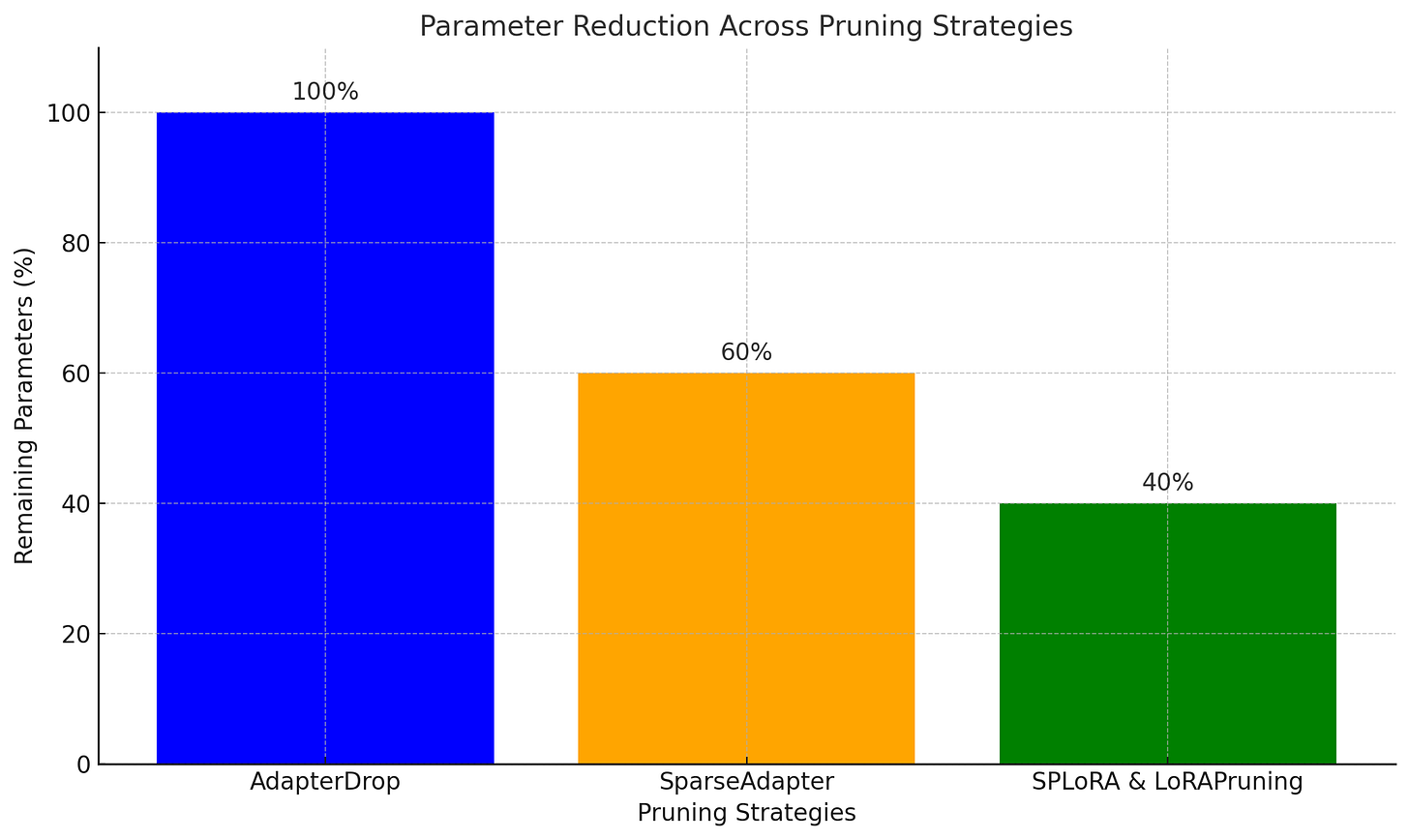

4.2 Pruning Strategies for PEFT

Pruning strategies in Parameter-Efficient Fine-Tuning (PEFT) aim to reduce the parameter count and computational overhead of fine-tuning modules by eliminating redundant or less important components. These techniques are particularly effective in speeding up inference while maintaining task-specific performance, making them invaluable for resource-constrained settings.

Key Pruning Techniques

AdapterDrop [117]:

Targets adapter modules, selectively skipping those in the lower layers of the model.

Lower-layer adapters often contribute minimally to task-specific performance, allowing AdapterDrop to focus computational resources on upper layers.

This technique achieves faster inference without significantly degrading accuracy, making it a simple yet effective pruning strategy.

SparseAdapter [118]:

Introduces sparsity within the adapter parameters, meaning a high proportion of adapter weights are zeroed out.

Balances the representational power of the adapter modules with computational efficiency, reducing the memory and processing demands of the model.

Particularly suitable for scenarios where maintaining both capacity and efficiency is critical.

SPLoRA and LoRAPruning [119][120]:

Extend pruning techniques to LoRA (Low-Rank Adaptation) modules by targeting both backbone and LoRA parameters.

These methods identify and remove low-importance parameters within the LoRA channels, reducing the parameter count while preserving the effectiveness of LoRA.

Ideal for resource-constrained environments where reducing memory and computational requirements is essential.
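Two of the ideas above can be sketched in a few lines: skipping adapters in the lowest layers, and zeroing most adapter weights. The helper names are hypothetical, and magnitude-based selection is used here only as one simple importance criterion; the cited methods may score parameters differently.

```python
import numpy as np

def active_adapter_layers(num_layers: int, drop_first: int) -> list:
    """AdapterDrop-style schedule: skip adapters in the lowest `drop_first` layers."""
    return [i >= drop_first for i in range(num_layers)]

def magnitude_prune(weights: np.ndarray, sparsity: float) -> np.ndarray:
    """Zero the smallest-magnitude fraction of entries and keep the rest unchanged."""
    k = int(round(sparsity * weights.size))
    if k == 0:
        return weights.copy()
    threshold = np.sort(np.abs(weights), axis=None)[k - 1]
    return np.where(np.abs(weights) > threshold, weights, 0.0)

active = active_adapter_layers(num_layers=12, drop_first=5)
adapter_w = np.random.default_rng(0).normal(size=(64, 16))
pruned = magnitude_prune(adapter_w, sparsity=0.8)
print(sum(active), int((pruned == 0).sum()))
```

With these settings, 7 of 12 layers keep their adapters and roughly 80% of the adapter weights are set to zero.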

Advantages and Applications

Pruning strategies in PEFT provide a practical pathway to optimize the efficiency of large language models by strategically reducing redundancy in fine-tuning modules. These methods improve inference speed, lower memory usage, and maintain task-specific performance, making them highly effective for real-world deployment in resource-limited settings. By tailoring computational focus to the most impactful parameters, pruning ensures that PEFT methods remain both scalable and accessible.

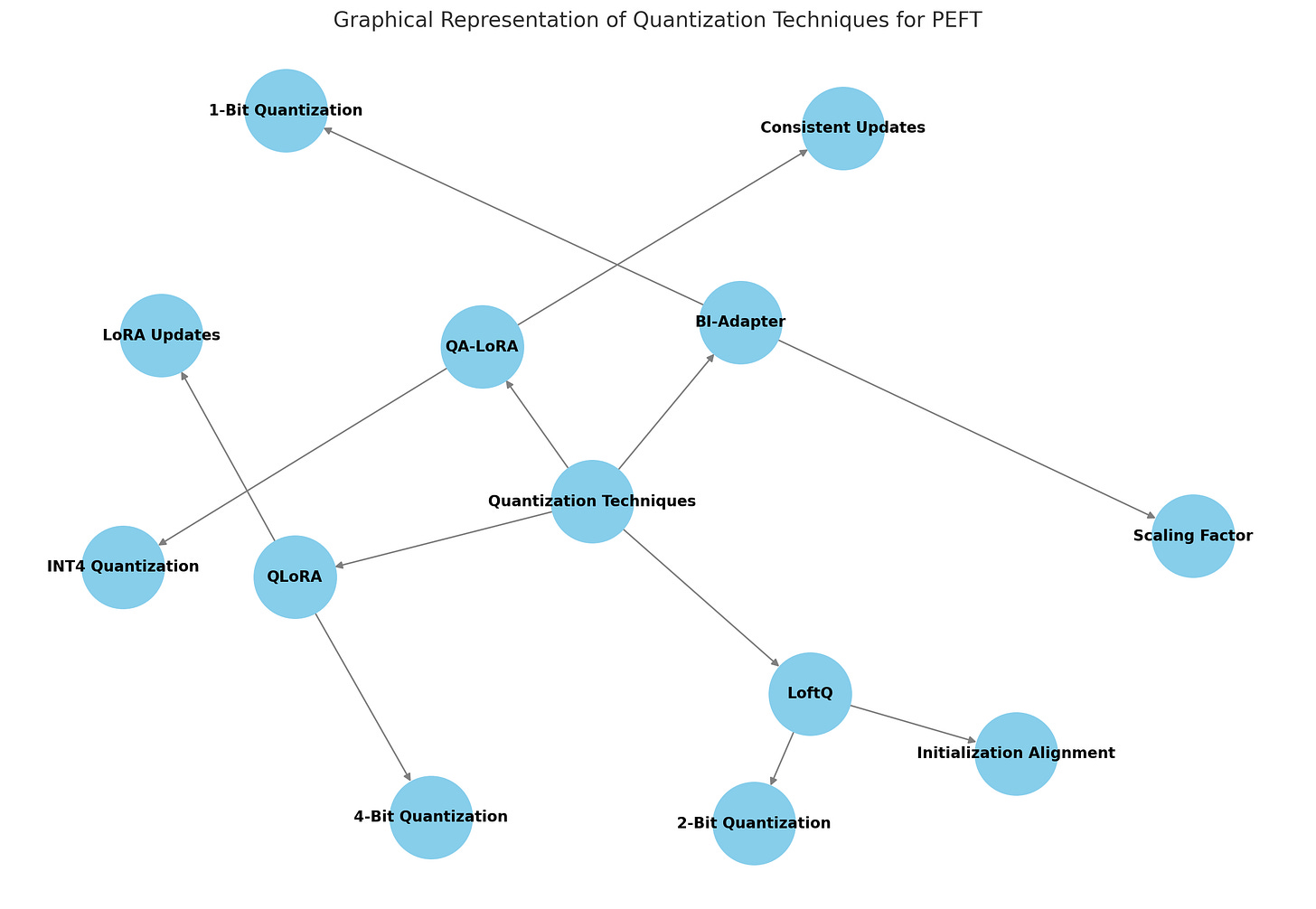

4.3 Quantization Strategies for PEFT

Quantization is a powerful technique for reducing the memory footprint and computational demands of Parameter-Efficient Fine-Tuning (PEFT) by representing model weights and activations in lower-precision numerical formats, such as 4-bit or even 1-bit integers. This approach significantly decreases storage and computational requirements while maintaining task-specific performance, making it ideal for resource-constrained environments.

Notable Quantization Strategies

BI-Adapter [122]:

Demonstrates that adapter modules are resilient to extreme quantization, achieving compression down to 1-bit precision while maintaining competitive performance.

This highlights the potential for significant memory and computational savings with adapters, enabling the use of large models in low-resource scenarios without substantial performance degradation.

QLoRA [124]:

A groundbreaking approach that combines 4-bit quantization with LoRA.

Allows backpropagation through a 4-bit quantized backbone while incorporating LoRA updates.

Keeps the LoRA adapters in higher precision alongside the frozen quantized backbone weights, providing a scalable and memory-efficient solution for fine-tuning large models in low-memory environments.

LoftQ and QA-LoRA [125][127]:

LoftQ: Addresses initialization mismatches by optimizing the alignment of LoRA with ultra-low-bit quantization (e.g., 2-bit precision). This ensures smooth integration and effective fine-tuning under extreme quantization.

QA-LoRA: Ensures consistency between the quantized pretrained backbone and LoRA updates by keeping both in a unified low-precision format, such as INT4. This alignment enhances the stability and effectiveness of quantized fine-tuning.
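The initialization mismatch that LoftQ targets can be illustrated by alternating between quantizing the backbone and fitting a low-rank correction to the residual. The sketch below is an assumption-laden illustration of that alternating idea: it swaps in a symmetric uniform quantizer with a fixed grid for whatever low-bit format a real system would use, and the function names are hypothetical.

```python
import numpy as np

def make_quantizer(w, bits):
    """Symmetric uniform quantize-dequantize on a grid fixed by the original weights."""
    levels = 2 ** (bits - 1) - 1
    scale = np.abs(w).max() / levels
    return lambda m: np.clip(np.round(m / scale), -levels, levels) * scale

def loftq_style_init(w, rank, bits, steps=5):
    """Alternate between quantizing the residual and fitting a rank-`rank` correction by SVD."""
    quantize = make_quantizer(w, bits)
    a = np.zeros((w.shape[0], rank))
    b = np.zeros((rank, w.shape[1]))
    for _ in range(steps):
        q = quantize(w - a @ b)                          # quantized backbone
        u, s, vt = np.linalg.svd(w - q, full_matrices=False)
        a, b = u[:, :rank] * s[:rank], vt[:rank]         # low-rank factors absorb the remaining error
    return q, a, b

rng = np.random.default_rng(0)
w = rng.normal(size=(64, 64))
q, a, b = loftq_style_init(w, rank=8, bits=2)
err_plain = np.linalg.norm(w - make_quantizer(w, 2)(w))  # quantization alone
err_loftq = np.linalg.norm(w - (q + a @ b))              # quantization plus low-rank correction
print(err_plain, err_loftq)
```

The low-rank factors start out approximating the quantization residual instead of starting from zero, so the quantized model plus adapter begins closer to the original weights.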

4.3.1 Advantages and Applications

Quantization strategies in PEFT enable significant reductions in memory and computational requirements while retaining model performance. Techniques like BI-Adapter, QLoRA, LoftQ, and QA-LoRA address challenges such as initialization mismatches and low-precision consistency, paving the way for scalable and efficient fine-tuning. These methods make it possible to deploy large language models in resource-constrained environments, extending their accessibility and practicality across a wide range of applications. By combining quantization with PEFT, researchers and practitioners can unlock the potential of large models while minimizing infrastructure costs.

4.3.2 Quantization Strategies for Parameter-Efficient Fine-Tuning (PEFT): An Overview

Quantization is a technique that reduces the memory footprint and computational demands of large language models by using lower-precision numerical formats. This is particularly important for deploying models in resource-constrained environments. Several strategies have been developed to achieve this efficiently while maintaining performance.

Notable Quantization Strategies:

BI-Adapter:

Resilience to Extreme Quantization: BI-Adapter demonstrates robustness to extreme quantization, even down to 1-bit precision, while maintaining competitive performance.

Potential Mechanisms: Presumably relies on design choices that preserve critical information at very low precision, so that performance is not significantly degraded.

QLoRA:

Combination of 4-bit Quantization and LoRA: QLoRA integrates 4-bit quantization with LoRA, allowing backpropagation through a quantized backbone.

Handling Gradients: Careful management of gradients in lower precision is essential to maintain training effectiveness.

LoftQ:

Addressing Initialization Mismatches: LoftQ optimizes the alignment of LoRA with ultra-low-bit quantization (e.g., 2-bit precision), ensuring stability and effectiveness in fine-tuning.

QA-LoRA:

Consistency in Low-Precision Formats: Maintains consistency between the quantized pre-trained backbone and LoRA updates, using a unified low-precision format like INT4.

Advantages and Applications:

Resource Efficiency: Significant reductions in memory and computational requirements, enabling deployment in low-resource environments.

Accessibility: Extends the practicality of large models across various applications, minimizing infrastructure costs.

Performance Maintenance: Techniques maintain performance despite lower precision, making them viable for real-world use cases.

Considerations and Future Directions:

Implementation Challenges: Ease of integration into existing pipelines and support from frameworks.

Performance Trade-offs: Potential scenarios where performance drops are more pronounced.

Interaction with Other PEFT Methods: Synergistic effects or conflicts when combining quantization with other techniques.

Training and Inference Performance: Impact on training time and hardware-specific inference efficiency.

Generalization and Robustness: Effects of quantization noise on model adaptability and robustness.

Integrating quantization with parameter-efficient fine-tuning (PEFT) starts from the role quantization plays in reducing memory and computational demands. Quantization maps higher-precision values to a lower-precision format, typically through scaling and rounding, which is what makes large models deployable in resource-constrained environments. PEFT methods such as LoRA fine-tune efficiently by adding low-rank updates to the model weights, preserving pretrained knowledge while adapting to specific tasks. Among the methods that combine the two, BI-Adapter shows that adapters tolerate extreme quantization, maintaining performance even at 1-bit precision, while QLoRA pairs a 4-bit quantized backbone with LoRA. Mathematically, the backbone weights are quantized and the low-rank updates are added on top, with the aim of keeping the two compatible and the quantization error small. The main challenges are the non-differentiability of the quantization function and the balance of precision between the quantized backbone and the low-rank updates. Potential solutions include quantization-aware training and joint optimization frameworks that treat quantization parameters and low-rank updates together, so that performance is maintained while resource requirements fall.

4.3.3 Mathematical Modeling of Quantization Strategies for Parameter-Efficient Fine-Tuning (PEFT) in Large Language Models

1. Introduction to Quantization and PEFT:

Quantization: Reduces the precision of model weights to lower memory and computational demands.

PEFT (Parameter-Efficient Fine-Tuning): Techniques like LoRA (Low-Rank Adaptation) introduce low-rank matrices to adapt models efficiently.

2. Combining Quantization with LoRA:

Quantization Process:

Divide the weights by a scale factor $s$, round to lower precision, and store the result as integers.

Dequantize by multiplying the stored integers by $s$.

LoRA Formulation:

Weight matrix $W = W_\text{base} + UV^T$, where $U$ and $V$ are low-rank matrices.

Quantization of $W_\text{base}$:

Quantize $W_\text{base}$ to lower precision while ensuring compatibility with $U$ and $V$.
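The quantize and dequantize round trip and the LoRA formulation above can be sketched together as follows. This is a minimal illustration under stated assumptions: the scale is chosen by the absolute maximum of the weights, the codes are signed 4-bit values stored in `int8`, and the function names are hypothetical. Real systems typically use block-wise scales and more elaborate formats.

```python
import numpy as np

def quantize_absmax(w, bits=4):
    """Return integer codes and the scale s such that w is approximately s * codes."""
    levels = 2 ** (bits - 1) - 1                 # 7 for signed 4-bit
    s = np.abs(w).max() / levels
    codes = np.clip(np.round(w / s), -levels, levels).astype(np.int8)
    return codes, s

def lora_forward(x, codes, s, u, v):
    """y = x (s * codes + U V^T): dequantized frozen base plus a higher-precision low-rank update."""
    w_base = s * codes.astype(np.float32)
    return x @ w_base + (x @ u) @ v.T

rng = np.random.default_rng(0)
w = rng.normal(size=(32, 32)).astype(np.float32)
codes, s = quantize_absmax(w)
u = rng.normal(scale=0.01, size=(32, 4)).astype(np.float32)   # trainable
v = np.zeros((32, 4), dtype=np.float32)                       # trainable, zero-init
x = rng.normal(size=(2, 32)).astype(np.float32)
y = lora_forward(x, codes, s, u, v)
max_err = np.abs(w - s * codes).max()                         # worst-case rounding error
print(y.shape, float(max_err), float(s) / 2)
```

Only `u` and `v` would receive gradients during fine-tuning; the integer codes and the scale stay fixed.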

3. Specific Strategies:

QLoRA:

Quantizes the backbone to 4 bits while keeping LoRA adapters in higher precision (e.g., 16-bit).

Involves careful handling of gradients during backpropagation.

BI-Adapter:

Demonstrates resilience to extreme quantization (e.g., 1-bit) while maintaining performance.

4. Mathematical Considerations:

Error Propagation:

Model quantization error and its impact on performance.

Techniques to minimize error, such as sophisticated quantization schemes.

Joint Optimization:

Explore optimizing quantization parameters and low-rank updates together.
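As one possible formalization of the two items above (an illustrative assumption, not a formula taken from the cited methods), uniform rounding with scale $s$ gives a per-entry error bound when no value is clipped, and joint optimization can be written as fitting the quantized backbone and the low-rank update to the original weights together:

```latex
\hat{W}_\text{base} = s \cdot \operatorname{round}\!\left(\frac{W_\text{base}}{s}\right),
\qquad
\left| (W_\text{base})_{ij} - (\hat{W}_\text{base})_{ij} \right| \le \frac{s}{2}

\min_{s,\,U,\,V} \; \left\lVert W_\text{base} - \hat{W}_\text{base}(s) - U V^T \right\rVert_F^2
```

The first line shows that a smaller scale tightens the rounding error but shrinks the range that can be represented without clipping; the second makes explicit that $U$ and $V$ can absorb part of the error that quantization introduces.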

5. Training and Evaluation:

Quantization-Aware Training:

Train models with awareness of subsequent quantization to improve performance.

Evaluation Metrics:

Accuracy, memory usage, computational speed, and energy efficiency.

6. Practical Considerations:

Initialization:

Specific techniques for initializing low-rank matrices in a quantized setting.

Layer-Specific Quantization:

Differentiate between attention layers and feedforward layers for optimized quantization.

7. Research and Implementation:

Existing Research:

Review studies and benchmarks for insights into quantized PEFT models.

Open-Source Implementations:

Experiment with available tools to gain hands-on understanding.

The integration of quantization with PEFT is a complex yet promising area that offers significant potential for deploying large language models efficiently. By understanding the mathematical interactions and practical implications, researchers and practitioners can develop more efficient and effective models for various applications.

4.4 Memory-Efficient Training

Memory-efficient training techniques in Parameter-Efficient Fine-Tuning (PEFT) are designed to address the significant memory challenges associated with training large language models (LLMs). By reducing the storage requirements for gradients and activations, these methods enable PEFT to scale effectively to larger models while keeping memory consumption manageable.

Key Memory-Efficient Training Methods

Side-Tuning and LST (Ladder-Side Tuning) [131][132]:

Introduce a small, learnable side branch or ladder-like structure parallel to the main model backbone.

During backpropagation, gradients are routed through the lightweight side branch instead of the entire backbone network, significantly reducing memory usage.

These methods are particularly effective for minimizing gradient storage during training while maintaining task-specific adaptability.

MEFT (Memory-Efficient Fine-Tuning) [134]:

Transforms the model into a reversible architecture, eliminating the need to store forward activations during training.

Forward activations can be recomputed during backpropagation from the final output, resulting in substantial memory savings.

This approach is especially beneficial for training very large-scale models where activation storage is a bottleneck.
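The reason a reversible architecture does not need stored activations can be seen in a two-stream coupling block, where the inputs are recovered exactly from the outputs. The sketch below uses a generic additive coupling; the functions `f` and `g` are arbitrary stand-ins for sublayers, and this is not MEFT's specific construction.

```python
import numpy as np

def f(x):
    return np.tanh(x)                 # stand-in for one sublayer

def g(x):
    return 0.5 * np.maximum(x, 0.0)   # stand-in for another sublayer

def reversible_forward(x1, x2):
    y1 = x1 + f(x2)
    y2 = x2 + g(y1)
    return y1, y2

def reversible_inverse(y1, y2):
    # Undo the two additive updates in reverse order.
    x2 = y2 - g(y1)
    x1 = y1 - f(x2)
    return x1, x2

rng = np.random.default_rng(0)
x1, x2 = rng.normal(size=(4, 8)), rng.normal(size=(4, 8))
y1, y2 = reversible_forward(x1, x2)
r1, r2 = reversible_inverse(y1, y2)
print(np.allclose(r1, x1), np.allclose(r2, x2))
```

During backpropagation, each block's inputs can be rebuilt this way from its outputs, trading extra computation for lower activation memory.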

LoRA-FA (LoRA with Frozen-A) [135]:

Reduces memory overhead in LoRA fine-tuning by freezing the down-projection matrix ($W_\text{down}$) and training only the up-projection matrix ($W_\text{up}$).

Because the gradient of $W_\text{up}$ depends only on the low-rank activation $W_\text{down}x$, the full input activations no longer need to be stored for gradient calculations, significantly lowering memory requirements during training.

Particularly suited for tasks involving high-dimensional inputs where memory usage is a critical concern.
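The memory argument can be checked numerically. The sketch below assumes the frozen matrix is the down-projection and compares what must be kept for the backward pass in each case; the shapes and variable names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
batch, d_in, d_out, r = 4, 256, 256, 8
x = rng.normal(size=(batch, d_in))
w_down = rng.normal(size=(d_in, r))        # frozen in this sketch
w_up = rng.normal(size=(r, d_out))         # trainable
grad_y = rng.normal(size=(batch, d_out))   # upstream gradient dL/dy for y = (x w_down) w_up

z = x @ w_down                 # low-rank activation, shape (batch, r)
grad_w_up = z.T @ grad_y       # gradient of the trainable matrix needs only z, not x

stored_with_frozen_down = z.size   # batch * r values
stored_if_down_trained = x.size    # batch * d_in values, since dL/dw_down = x^T (grad_y w_up^T)
print(stored_with_frozen_down, stored_if_down_trained)
```

Here the stored activation shrinks from 1,024 values to 32, a factor of `d_in / r`.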

MeZO (Memory-Efficient Zeroth-Order) [138]:

A gradient-free, zeroth-order optimization approach for PEFT.

Instead of relying on backpropagation, MeZO uses forward passes combined with zeroth-order gradient estimators to fine-tune LoRA modules.

By completely bypassing the need for gradient calculations and storage, MeZO drastically reduces memory usage, enabling efficient fine-tuning even in highly constrained environments.
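A zeroth-order update of this kind can be sketched with a two-point estimator: perturb the parameters along a random direction, run two forward passes, and step along that direction in proportion to the loss difference. The toy quadratic `loss`, the hyperparameters, and the function name `mezo_step` are assumptions for illustration; a real setup would evaluate the model on a mini-batch.

```python
import numpy as np

def loss(theta):
    """Toy quadratic objective standing in for a forward pass of the model."""
    return float(np.sum((theta - 3.0) ** 2))

def mezo_step(theta, lr, eps, seed):
    """One zeroth-order step: two forward passes, no backpropagation."""
    z = np.random.default_rng(seed).normal(size=theta.shape)
    loss_plus = loss(theta + eps * z)
    loss_minus = loss(theta - eps * z)
    projected_grad = (loss_plus - loss_minus) / (2 * eps)   # a single scalar
    # The direction is regenerated from the seed instead of being kept in memory.
    z = np.random.default_rng(seed).normal(size=theta.shape)
    return theta - lr * projected_grad * z

theta = np.zeros(10)
start = loss(theta)
for step in range(500):
    theta = mezo_step(theta, lr=0.01, eps=1e-3, seed=step)
end = loss(theta)
print(start, end)
```

Only a scalar and a random seed are carried between the forward passes and the update, which is where the memory saving comes from.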

Advantages and Applications

Memory-efficient training techniques provide critical solutions for scaling PEFT to larger models and more complex tasks. By addressing memory bottlenecks during training, methods such as Side-Tuning, MEFT, LoRA-FA, and MeZO reduce computational overhead while maintaining high task performance. These innovations make it possible to fine-tune large-scale LLMs efficiently, paving the way for broader adoption in memory-constrained and resource-limited scenarios. These strategies underscore the ongoing efforts to make PEFT scalable, practical, and accessible for real-world applications.

4.5 Summary of Efficiency Strategies

4.5.1 Efficient PEFT Design: Innovations for Resource Efficiency

Parameter-Efficient Fine-Tuning (PEFT) has emerged as a critical approach for adapting large language models (LLMs) to specific downstream tasks while minimizing computational and memory overheads. This efficiency makes PEFT particularly attractive for deploying LLMs in resource-constrained environments. Key innovations in PEFT, including KV-cache management, pruning, quantization, and memory-efficient training techniques, collectively ensure that these methods remain practical for large-scale deployments without sacrificing the performance gains offered by large models.

KV-Cache Management

KV-cache management optimizes the handling of key-value (KV) pairs stored during decoding to avoid redundant computations. However, as sequence lengths and adapter layers increase, KV caches pose significant memory challenges, particularly in multi-adapter setups. S-LoRA addresses this issue with a unified paging mechanism that segments KV-cache blocks associated with each adapter. This strategy minimizes fragmentation, optimizes cache usage, and ensures smooth real-time inference, even under heavy workloads.

Pruning

Pruning reduces the parameter count and computational overhead by selectively removing redundant or less important components from the model, effectively speeding up inference without a significant drop in accuracy.

AdapterDrop: Skips adapters in the lower layers of the model, where their contribution to task-specific performance is minimal, reallocating resources to upper layers for better efficiency.

SparseAdapter: Introduces sparsity within adapter parameters, balancing representational power and computational efficiency.

SPLoRA and LoRAPruning: Extend pruning to LoRA modules by targeting low-importance parameters in both the backbone and LoRA channels, reducing parameter counts while preserving model effectiveness.

Quantization

Quantization significantly reduces the memory footprint and computational demands by representing model weights and activations in lower-precision numerical formats.

BI-Adapter: Demonstrates the resilience of adapter modules to extreme quantization, achieving compression down to 1-bit precision while maintaining performance.

QLoRA: Combines 4-bit quantization with LoRA, enabling backpropagation through a quantized backbone while incorporating LoRA updates for efficient fine-tuning.

LoftQ and QA-LoRA: Tackle the complexities of merging quantized weights with LoRA updates. LoftQ optimizes initialization for ultra-low-bit quantization (e.g., 2-bit), while QA-LoRA ensures consistent low-precision formats (e.g., INT4) for both the pretrained backbone and LoRA updates.

Memory-Efficient Training

Memory-efficient training techniques reduce the storage of gradients and activations during training, addressing the challenges posed by large models:

Side-Tuning and Ladder-Side Tuning (LST): Introduce lightweight side branches or ladder structures to redirect gradient computations, significantly reducing memory usage.

MEFT (Memory-Efficient Fine-Tuning): Transforms the model into a reversible architecture, eliminating the need to store forward activations during training, resulting in substantial memory savings.

LoRA-FA: Freezes one of the projection matrices in LoRA, reducing activation storage requirements during training, particularly for tasks with high-dimensional inputs.

MeZO: A gradient-free, zeroth-order optimization approach that relies solely on forward passes to fine-tune LoRA modules. By bypassing gradient storage and propagation, MeZO drastically cuts memory usage, enabling efficient fine-tuning in memory-constrained environments.

These innovations collectively highlight the ongoing efforts to make PEFT more resource-efficient and scalable. From optimizing KV-cache management for real-time serving to leveraging pruning, quantization, and memory-efficient training techniques, PEFT enables practical and effective adaptation of large language models in various real-world scenarios, particularly in resource-limited settings. These advancements ensure that PEFT continues to unlock the potential of large models while minimizing infrastructure costs, making it a vital tool for modern AI deployment.

5.1 PEFT for Large Language Models (Beyond Basics)

Parameter-Efficient Fine-Tuning (PEFT) methods have demonstrated remarkable versatility in adapting large language models (LLMs) for specialized tasks across diverse domains. By fine-tuning lightweight modules or adapters, PEFT enables efficient task adaptation without altering the core pretrained model. Below are some notable applications showcasing the adaptability and computational efficiency of PEFT techniques.

5.1.1 Visual Instruction Following

PEFT methods have been extended to multi-modal tasks that integrate textual and visual inputs, enabling LLMs to process and respond to image-based instructions. Frameworks such as LLaMA-Adapter [164] and LLaVA [154] exemplify this adaptation by coupling a visual encoder with a pretrained LLM. These frameworks introduce lightweight adapters or prompts to handle multi-modal instruction following, allowing the LLM to interpret visual inputs alongside textual queries. By fine-tuning only the additional components, these approaches achieve high adaptability and performance at a reduced computational cost, without modifying the pretrained backbone.

5.1.2 Continual Learning

Continual learning addresses the challenge of training models to learn new tasks sequentially without losing knowledge from prior tasks, a problem known as catastrophic forgetting. PEFT methods like AdapterCL [171] and CPT [172] tackle this issue by maintaining separate adapters or prompts for each task. This modular structure ensures that task-specific knowledge is preserved while new tasks are added seamlessly. Additionally, O-LoRA [175] introduces orthogonal subspaces in multi-task LoRA modules, minimizing task interference. This innovation enables models to maintain high performance across multiple domains, even in scenarios requiring sequential task adaptation.
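The idea of keeping task-specific LoRA modules in orthogonal subspaces can be expressed as a penalty on the overlap between the factors of different tasks. The sketch below is an illustrative assumption about how such a penalty might look; which LoRA factor is constrained and the function name `orthogonality_penalty` are not taken from the source.

```python
import numpy as np

def orthogonality_penalty(a_prev, a_new):
    """Sum of squared inner products between the columns of two LoRA factors."""
    return float(np.sum((a_prev.T @ a_new) ** 2))

rng = np.random.default_rng(0)
d, r = 64, 4
basis, _ = np.linalg.qr(rng.normal(size=(d, 2 * r)))     # 2r orthonormal columns
a_task1 = basis[:, :r]                                   # factor learned for an earlier task
a_task2_orth = basis[:, r:]                              # new factor in an orthogonal subspace
a_task2_overlap = a_task1 + 0.1 * rng.normal(size=(d, r))  # new factor that reuses the old subspace

print(orthogonality_penalty(a_task1, a_task2_orth))
print(orthogonality_penalty(a_task1, a_task2_overlap))
```

Adding such a term to the training loss of a new task would discourage updates that fall into directions already used by earlier tasks.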

5.1.3 Context Window Extension

Handling long-range dependencies is critical for tasks such as summarizing lengthy documents or processing extended conversations. PEFT techniques have been applied to extend the context length of LLMs:

LongLoRA [177]: Combines LoRA updates with shifted sparse attention during fine-tuning to extend the context window of LLaMA-2 models from 4k tokens to as many as 100k tokens (for the 7B model), enabling efficient processing of long textual sequences.

LLoCO [179]: Offers a complementary approach by compressing user documents offline and integrating this compressed data with LoRA modules. This method allows the model to manage extended context scenarios effectively, reducing computational strain while retaining performance.

Key Insights

These applications illustrate the broad applicability and effectiveness of PEFT in adapting LLMs for specialized tasks. From enabling multi-modal instruction following to facilitating continual learning and extending context windows, PEFT techniques demonstrate their ability to enhance model functionality without the computational and memory overhead of full fine-tuning. These innovations enable LLMs to address complex, real-world challenges in a scalable and resource-efficient manner.

5.2 PEFT for Vision Transformers

The increasing scale and complexity of Vision Transformers (ViTs) [184]–[188] have made Parameter-Efficient Fine-Tuning (PEFT) methods indispensable for adapting these powerful models to specific tasks without the computational burden of full fine-tuning. By focusing on lightweight and modular adaptations, PEFT techniques allow ViTs to retain their pretrained generalization capabilities while enabling efficient task-specific customization.

Prominent PEFT Methods for Vision Transformers

AdaptFormer [194]:

Introduces lightweight, adaptable modules into the feed-forward layers of ViTs.

These modules can operate in parallel with the original layers or seamlessly integrate into them.

This approach enables efficient task-specific adaptation without altering the pretrained backbone, preserving the model’s ability to generalize across diverse tasks.

AdaptFormer provides flexibility in fine-tuning while maintaining computational efficiency, making it ideal for large-scale vision tasks.

Visual Prompt Tuning (VPT) [193]:

Inserts learnable “visual prompts” into the input patch embeddings of ViTs.

These prompts act as additional trainable parameters that guide the model toward task-specific objectives.

A key advantage of VPT is its non-intrusive design—it does not require modifications to the core architecture of the ViT.

This makes VPT a highly efficient method for fine-tuning, balancing task performance with minimal computational overhead.
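Inserting visual prompts amounts to concatenating a few trainable tokens with the patch tokens before they enter the frozen Transformer. The following minimal sketch shows only that step; it omits the class token and the backbone, and the function name and shapes are illustrative assumptions.

```python
import numpy as np

def prepend_visual_prompts(patch_embeddings, prompts):
    """Concatenate learnable prompt tokens in front of the patch tokens of every image."""
    batch = patch_embeddings.shape[0]
    tiled = np.broadcast_to(prompts, (batch,) + prompts.shape)   # same prompts for each image
    return np.concatenate([tiled, patch_embeddings], axis=1)

rng = np.random.default_rng(0)
patches = rng.normal(size=(2, 196, 768))           # frozen patch embeddings: (batch, tokens, dim)
prompts = rng.normal(scale=0.02, size=(10, 768))   # trainable prompt tokens
tokens = prepend_visual_prompts(patches, prompts)
print(tokens.shape)
```

Only the 10 x 768 prompt matrix (and typically a task head) would be updated, while the backbone processes the longer token sequence unchanged.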

Key Contributions and Applications

PEFT methods such as AdaptFormer and VPT highlight the successful adaptation of parameter-efficient techniques, originally developed for natural language processing, to the domain of computer vision. By enabling efficient fine-tuning of large ViTs, these approaches support a wide range of vision tasks, from image classification to object detection and beyond, while addressing challenges related to computational resources and model complexity.

These innovations underscore the importance of PEFT in making ViTs more accessible and scalable, paving the way for further advancements in computer vision applications.

5.3 PEFT for Vision–Language Alignment Models

Vision–language alignment models (VLAs), such as CLIP [199] and ALIGN [200], have significantly advanced tasks like open-vocabulary classification and image-text retrieval by aligning visual and textual representations. Parameter-Efficient Fine-Tuning (PEFT) techniques have further enhanced these models by enabling task-specific adaptation without extensive computational resources.

Prominent PEFT Techniques for VLAs

CoOp and CoCoOp [216][217]:

Replace handcrafted text prompts with learnable vectors, allowing for more dynamic and flexible alignment between visual and language representations.

These learnable prompts adapt quickly to new tasks, making them robust alternatives to traditional manual prompt engineering.

CoCoOp extends CoOp by conditioning the learnable prompt on each input image through a lightweight meta-network, which improves generalization to classes not seen during training.

CLIP-Adapter and Tip-Adapter [222][223]:

Integrate small residual adapters into the pretrained CLIP model to refine its representations for zero-shot and few-shot classification tasks.

These lightweight adapters improve performance with minimal parameter updates, offering a highly efficient approach to task-specific fine-tuning.

CLIP-Adapter learns a small residual bottleneck on top of CLIP features to improve alignment for downstream tasks, while Tip-Adapter builds a key-value cache from the features and labels of the few-shot training set, which works without training and can optionally be fine-tuned for further gains.
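A cache-based adapter of the kind associated with Tip-Adapter can be sketched as a similarity lookup over stored few-shot features, blended with the zero-shot text classifier. The sketch below uses random vectors as stand-ins for CLIP image and text embeddings; the function names and the values of `alpha` and `beta` are illustrative assumptions.

```python
import numpy as np

def l2_normalize(x):
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def cache_logits(query, keys, values, text_weights, alpha=1.0, beta=5.5):
    """Blend few-shot cache predictions with zero-shot text-classifier logits."""
    affinity = np.exp(-beta * (1.0 - query @ keys.T))   # similarity to each cached example
    return alpha * affinity @ values + query @ text_weights.T

rng = np.random.default_rng(0)
num_classes, shots, dim = 3, 4, 512
keys = l2_normalize(rng.normal(size=(num_classes * shots, dim)))   # cached image features
labels = np.repeat(np.arange(num_classes), shots)                  # class of each cached feature
values = np.eye(num_classes)[labels]                               # one-hot labels
text_weights = l2_normalize(rng.normal(size=(num_classes, dim)))   # stand-in for text embeddings
query = keys[5:6]                                                  # identical to a cached class-1 example
pred = int(np.argmax(cache_logits(query, keys, values, text_weights)))
print(pred)
```

Because the cache is built directly from features, no gradient step is needed; making `keys` trainable is the optional fine-tuning variant.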

Key Contributions and Applications

PEFT techniques like CoOp, CoCoOp, CLIP-Adapter, and Tip-Adapter exemplify the adaptation of parameter-efficient strategies for VLAs. These methods enable the efficient fine-tuning of vision–language models, supporting diverse applications such as open-vocabulary classification, image-text retrieval, and zero-shot learning. By leveraging lightweight and modular adjustments, PEFT reduces the computational burden while maintaining high performance, making VLAs more accessible and practical for a broad range of multi-modal tasks.

These advancements underscore the potential of PEFT in bridging vision and language modalities, furthering progress in multi-modal learning while addressing the challenges of resource efficiency and model complexity.

5.4 PEFT for Diffusion Models

Diffusion models, widely recognized for generative tasks such as text-to-image synthesis [226]–[228] and exemplified by Stable Diffusion [233], have significantly benefited from Parameter-Efficient Fine-Tuning (PEFT) strategies. These methods enable targeted adaptations for specific conditions or tasks while maintaining computational efficiency and preserving the integrity of the pretrained model.

Key Applications of PEFT in Diffusion Models

ControlNet [240]:

Introduces trainable side networks appended to the main diffusion model.

These networks incorporate condition signals such as edge maps, depth information, or keypoints, enabling the model to generate highly specific outputs tailored to these inputs.

By training only the side networks and keeping the main model’s weights frozen, ControlNet achieves targeted adaptations without compromising the pretrained model’s generalization abilities.

Textual Inversion and Custom Diffusion [243][244]:

Textual Inversion: Focuses on introducing new pseudo-words in the embedding space to represent specific visual concepts. This method freezes the main model and trains only the embeddings of the new pseudo-words, allowing the diffusion model to learn novel concepts efficiently.

Custom Diffusion: Trains partial cross-attention layers, enabling the model to adapt to new concepts provided by a small set of user images. Like Textual Inversion, this approach keeps the main model weights unchanged, ensuring computational efficiency and minimizing parameter updates.

IP-Adapter [245]:

Adds a dedicated cross-attention module specifically for processing image inputs in text-to-image generation tasks.

By fine-tuning only this additional module, the model integrates image information efficiently, extending its capabilities to multi-modal generative tasks.

This targeted fine-tuning approach enhances the model's versatility with minimal computational overhead.
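A dedicated image cross-attention module can be pictured as a second attention branch that shares the query with the existing text branch and adds its output. The sketch below is a single-head illustration under that assumption; the parameter dictionary `p`, its key names, and the `scale` argument are hypothetical.

```python
import numpy as np

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def attention(q, k, v):
    return softmax(q @ k.T / np.sqrt(q.shape[-1])) @ v

def decoupled_cross_attention(latent_q, text_feats, image_feats, p, scale=1.0):
    """Frozen text cross-attention plus a separately parameterized image cross-attention."""
    text_out = attention(latent_q, text_feats @ p["k_text"], text_feats @ p["v_text"])       # frozen
    image_out = attention(latent_q, image_feats @ p["k_image"], image_feats @ p["v_image"])  # trainable
    return text_out + scale * image_out

rng = np.random.default_rng(0)
d = 16
p = {name: rng.normal(size=(d, d)) for name in ("k_text", "v_text", "k_image", "v_image")}
latent_q = rng.normal(size=(5, d))      # queries from the denoising network
text_feats = rng.normal(size=(7, d))    # text-encoder tokens
image_feats = rng.normal(size=(4, d))   # image-encoder tokens
out = decoupled_cross_attention(latent_q, text_feats, image_feats, p, scale=0.5)
text_only = decoupled_cross_attention(latent_q, text_feats, image_feats, p, scale=0.0)
print(out.shape)
```

Setting `scale` to zero recovers the original text-only behavior, so the added branch can be weighted or disabled at inference time.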

Advantages and Applications

PEFT methods in diffusion models exemplify how parameter-efficient strategies can optimize powerful generative models for diverse tasks. Techniques like side networks, pseudo-words, and cross-attention modules demonstrate the adaptability of diffusion models to specialized applications, such as fine-grained conditional generation and concept learning, without the computational cost of full fine-tuning.

These advancements underscore the potential of PEFT in enabling efficient customization of diffusion models for real-world applications, from creative tasks like digital art generation to technical applications in 3D modeling and design. By leveraging these innovations, diffusion models can continue to expand their impact across a wide range of domains while maintaining scalability and efficiency.

5.5 Summary of Applications

The extension of Parameter-Efficient Fine-Tuning (PEFT) techniques beyond traditional natural language processing (NLP) tasks underscores their versatility and scalability in adapting large-scale models across diverse domains. Whether applied to multi-modal large language models (LLMs), vision transformers (ViTs), vision–language alignment models (VLAs), or diffusion models, PEFT methods consistently achieve impressive efficiency and adaptability. By focusing on updating only a small fraction of parameters or introducing lightweight components, these techniques ensure scalability, practicality, and cost-effectiveness for a wide range of applications.

Applications Across Domains

Multimodal Large Language Models (LLMs):

PEFT has been successfully extended to multi-modal tasks requiring the integration of visual and textual inputs.

Frameworks like LLaMA-Adapter and LLaVA combine a visual encoder with a pretrained LLM, introducing lightweight adapters or prompts to handle multi-modal instruction following.

By fine-tuning only the added components, these frameworks enable LLMs to interpret and respond to image-based instructions efficiently, preserving the integrity of the pretrained backbone and achieving high adaptability with low computational cost.

Vision Transformers (ViTs):

The increasing scale and complexity of ViTs have made PEFT indispensable for efficient task-specific adaptation.

AdaptFormer incorporates lightweight modules into the feed-forward layers of ViTs, enabling efficient fine-tuning while retaining the pretrained model’s generalization capabilities.

Visual Prompt Tuning (VPT) introduces learnable "visual prompts" into the input patch embeddings, serving as additional trainable parameters that guide the model toward specific tasks without altering its core architecture.

Vision–Language Alignment Models (VLAs):

VLAs like CLIP and ALIGN have advanced significantly with PEFT methods, enhancing tasks like open-vocabulary classification and image-text retrieval.

CoOp and CoCoOp replace handcrafted text prompts with learnable vectors, offering more dynamic and flexible alignment between visual and language representations.

CLIP-Adapter and Tip-Adapter add small residual adapters to the pretrained CLIP model, refining representations for zero-shot and few-shot classification tasks with minimal parameter updates.

Diffusion Models:

Diffusion models, widely used in generative tasks like text-to-image synthesis (for example, Stable Diffusion), have also benefited from PEFT techniques.

ControlNet appends trainable side networks to the main model to incorporate condition signals such as edge maps, depth data, or keypoints, enabling highly targeted adaptations.

Textual Inversion and Custom Diffusion freeze the main model weights and train smaller modules, such as pseudo-words or partial cross-attention layers, for learning specific visual concepts.

IP-Adapter introduces a dedicated cross-attention module to process image inputs efficiently for text-to-image generation tasks, enhancing model versatility with minimal computational overhead.
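
As one concrete illustration of the mechanisms summarized above, the Visual Prompt Tuning idea can be sketched in a few lines. This is a minimal sketch, not the authors' implementation: the function names are hypothetical, the token ordering ([CLS], then prompts, then patches) is an assumption, and embeddings are plain Python lists rather than tensors. It shows only that the backbone inputs are left unchanged and that the prompt vectors are the sole added parameters.

```python
import random

def init_prompts(num_prompts, dim, seed=0):
    """Create the learnable prompt vectors (the only new parameters)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(num_prompts)]

def build_input_sequence(cls_token, prompts, patch_embeddings):
    """Assumed ordering: [CLS] + prompts + patches. Frozen inputs are copied as-is."""
    return [list(cls_token)] + [list(p) for p in prompts] + [list(e) for e in patch_embeddings]

def added_parameter_count(num_prompts, dim):
    """Number of trainable values introduced by the prompts."""
    return num_prompts * dim

# Toy sizes chosen for illustration only.
dim = 8
cls_token = [0.0] * dim
patches = [[float(i)] * dim for i in range(6)]   # 6 frozen patch embeddings
prompts = init_prompts(num_prompts=4, dim=dim)

sequence = build_input_sequence(cls_token, prompts, patches)
print(len(sequence))                      # 1 + 4 + 6 tokens
print(added_parameter_count(4, dim))      # 4 * 8 trainable values
```

The sequence grows by the number of prompts, so the attention cost rises slightly even though the backbone weights stay frozen.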

Key Takeaways

These applications illustrate how PEFT methods enable the efficient and effective adaptation of large models across diverse domains. By reducing computational and memory requirements while maintaining performance, PEFT techniques make advanced models more accessible for real-world scenarios. From multi-modal instruction following to generative modeling, PEFT has proven its capacity to scale large models for specialized tasks without the resource demands of traditional fine-tuning.

6.1 Centralized PEFT Serving

Centralized Parameter-Efficient Fine-Tuning (PEFT) serving systems are designed to efficiently manage large language models (LLMs) in cloud-based environments, where high query throughput and optimal resource utilization are critical. These systems maintain a frozen backbone model and integrate multiple PEFT modules tailored for specific tasks. This modular approach enables serving diverse user requests without the computational overhead of full fine-tuning for each task, making centralized systems ideal for real-time, multi-task scenarios.

Challenges in Centralized PEFT Serving

Managing Multiple PEFT Modules:

Centralized systems must efficiently store, load, and switch between numerous PEFT modules, each specialized for a different task or domain.

This requires intelligent orchestration to handle diverse task requests seamlessly.

Efficient Query Processing:

High query throughput demands optimization of computation flows for both the frozen LLM backbone and the attached PEFT modules.

Techniques like operator batching and specialized scheduling algorithms are crucial for improving efficiency.

Resource Allocation and Scalability:

As user demand and task diversity grow, the system must scale dynamically.

Efficient allocation of computational resources (e.g., GPUs) and effective memory management are essential to prevent bottlenecks and ensure responsiveness.

Key Solutions

PetS [248]:

Addresses query processing challenges by separating computationally intensive matrix-vector multiplication (MVM) operations on the frozen LLM backbone from lighter adapter or LoRA computations.

Introduces a specialized scheduler that groups similar queries into batches, optimizing MVM operations.

This approach significantly enhances query throughput, enabling efficient handling of diverse PEFT tasks in real-time environments.

dLoRA [249]:

Tackles load imbalance in distributed setups where multiple workers process PEFT queries.

Dynamically merges and unmerges LoRA blocks across workers, ensuring balanced resource utilization.

This method improves overall system performance, particularly in scenarios with varying query loads, by preventing any single worker from becoming a bottleneck.
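
The merge and unmerge operations that dLoRA switches between can be illustrated with a minimal sketch. It assumes the standard LoRA formulation, in which a frozen weight W is adapted as W + scale * B A; the helper names (`merge`, `unmerge`, `forward_unmerged`) are illustrative and are not dLoRA's API. Merging folds the adapter into W so that a batch using a single adapter pays no extra cost, while the unmerged path keeps W shared so that different adapters can be applied side by side.

```python
def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def add_scaled(a, b, scale):
    """Return a + scale * b, elementwise."""
    return [[x + scale * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def merge(w, lora_b, lora_a, scale):
    """Fold the low-rank update into the base weight: W' = W + scale * B A."""
    return add_scaled(w, matmul(lora_b, lora_a), scale)

def unmerge(w_merged, lora_b, lora_a, scale):
    """Recover the shared base weight: W = W' - scale * B A."""
    return add_scaled(w_merged, matmul(lora_b, lora_a), -scale)

def forward_unmerged(w, lora_b, lora_a, scale, x):
    """Compute W x + scale * B (A x) without touching W."""
    return add_scaled(matmul(w, x), matmul(lora_b, matmul(lora_a, x)), scale)

# Toy example: 2x2 base weight, rank-1 adapter, one column-vector input.
w = [[1, 2], [3, 4]]
lora_b = [[1], [2]]      # d_out x r
lora_a = [[3, 4]]        # r x d_in
x = [[1], [1]]
scale = 0.5

w_merged = merge(w, lora_b, lora_a, scale)
y_merged = matmul(w_merged, x)
y_unmerged = forward_unmerged(w, lora_b, lora_a, scale, x)
w_restored = unmerge(w_merged, lora_b, lora_a, scale)
```

Both paths produce the same output; the choice between them is a scheduling decision about which cost to pay for a given mix of requests.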

Advantages of Centralized PEFT Serving

Centralized PEFT serving systems focus on modularity, efficiency, and scalability, allowing multiple PEFT modules to operate concurrently with a shared backbone. By addressing challenges in query processing, resource allocation, and module management, these systems minimize latency, maximize resource utilization, and ensure responsiveness for diverse applications. Innovations like PetS and dLoRA highlight the potential of centralized PEFT serving to make large-scale LLM deployments more practical, cost-effective, and adaptable to real-world demands.

6.2 Distributed PEFT Training

Distributed Parameter-Efficient Fine-Tuning (PEFT) strategies address critical challenges such as data privacy and computational efficiency, particularly when training involves geographically dispersed or resource-constrained environments. By distributing the fine-tuning process across multiple devices or servers, these frameworks enable scalable model adaptation while maintaining data security and minimizing resource demands.

Key Frameworks for Distributed PEFT Training

DLoRA [250]:

Retains the large model weights on a central cloud server while fine-tuning LoRA modules on edge devices.

Ensures data privacy by avoiding the transfer of raw user data to the central server, complying with data residency regulations and preserving user confidentiality.

The central server sends LoRA modules to edge devices for local fine-tuning using private data. Once updated, the LoRA modules are sent back to the server and integrated into the global model.

This approach is particularly advantageous for sensitive data scenarios, where sharing data externally is prohibited due to privacy or regulatory constraints.

Offsite-Tuning [251]:

Provides a compressed "emulator" of the full model, enabling data owners to train adapter modules locally on their infrastructure.

The emulator, a lightweight and less resource-intensive version of the complete model, allows data owners to fine-tune adapters without accessing or sharing the full model weights.

Protects the intellectual property of the model owner while reducing the computational burden on the data owner. Once trained, the adapter is sent back to the central server, where it is integrated into the full model.

This framework strikes a balance between performance and privacy, facilitating fine-tuning with a compressed model while ensuring data security.

Advantages of Distributed PEFT Training

Privacy Preservation: By keeping sensitive data localized and avoiding direct data exchange, distributed frameworks comply with privacy regulations and protect user confidentiality.

Resource Efficiency: Local fine-tuning on edge devices or compressed models minimizes the computational and memory demands on individual devices.

Scalability: These frameworks support large-scale collaborative fine-tuning across diverse environments, enabling broader deployment of large models in real-world applications.

Key Insights

Distributed PEFT training frameworks, such as DLoRA and Offsite-Tuning, represent significant advancements in adapting large-scale models for collaborative settings. By addressing data privacy, security, and resource constraints, these methods enable the scalable fine-tuning of large language models and other architectures in diverse, resource-limited environments. They highlight the importance of integrating algorithmic efficiency with system-level design to ensure practical, secure, and effective deployment of large models in real-world collaborative scenarios.

6.3 Parallel PEFT Training (Multi-PEFT)

As the adoption of Parameter-Efficient Fine-Tuning (PEFT) grows, there is an increasing demand to handle multiple fine-tuning tasks concurrently. Parallel PEFT training frameworks address this challenge by optimizing GPU utilization and managing concurrency effectively, particularly for scenarios where multiple adapters or LoRA tasks are executed simultaneously. These systems are critical for deploying large-scale, multi-tenant AI platforms, enabling multiple users or tasks to benefit from PEFT techniques without degrading performance or resource efficiency.

Punica: A Leading Parallel PEFT Framework

Punica [252] exemplifies state-of-the-art solutions for managing parallel PEFT tasks through the following innovations:

Identifying Repeated Operations:

Punica identifies repeated matrix-vector multiplication (MVM) operations within shared sub-graphs of different PEFT tasks.

These repeated operations are consolidated into single, batched calls, reducing redundant computations and improving overall efficiency.

Custom CUDA Kernels (SGMV):

Employs Segmented Gather Matrix-Vector multiplication (SGMV), a custom CUDA kernel specifically designed for efficient batched execution of requests that use different LoRA modules.

These kernels leverage GPU parallelism to enhance the performance of matrix operations required for PEFT, ensuring faster and more efficient computations.

Multi-Tenant Scheduling:

Incorporates a dynamic multi-tenant scheduling approach, which allocates GPU resources across multiple concurrent tasks.

This strategy ensures balanced resource distribution, preventing any single task from monopolizing resources and maximizing GPU utilization while minimizing idle times.
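
The batching idea behind the SGMV kernel described above can be written down as a plain-Python reference. This is a sketch of the operation's semantics only, under the assumption that requests are sorted so that rows sharing an adapter are contiguous; it is not Punica's CUDA code, and the names `sgmv` and `batched_lora` are illustrative. Each segment of the batch is multiplied by its own adapter weights, and a LoRA update is expressed as two such passes (down to rank r, then back up).

```python
def sgmv(x, weights, segments):
    """Reference semantics: y[s[i]:s[i+1]] = x[s[i]:s[i+1]] @ weights[i].

    x        : batch of input rows (list of lists)
    weights  : one matrix per segment, each d_in x d_out
    segments : boundaries, so segment i covers rows segments[i]..segments[i+1]-1
    """
    d_out = len(weights[0][0])
    y = [[0.0] * d_out for _ in x]
    for i, w in enumerate(weights):
        for row in range(segments[i], segments[i + 1]):
            for j in range(d_out):
                y[row][j] += sum(x[row][k] * w[k][j] for k in range(len(w)))
    return y

def batched_lora(x, adapters, segments, scale=1.0):
    """Apply a different (A, B) pair to each segment: scale * (x @ A) @ B."""
    down = sgmv(x, [a for a, _ in adapters], segments)    # shrink to rank r
    up = sgmv(down, [b for _, b in adapters], segments)   # expand to d_out
    return [[scale * v for v in row] for row in up]

# Three requests: rows 0-1 use adapter 0, row 2 uses adapter 1.
x = [[1, 0], [0, 1], [1, 1]]
adapters = [
    ([[1], [2]], [[1, 1]]),   # adapter 0: A is 2x1, B is 1x2
    ([[3], [4]], [[1, 0]]),   # adapter 1
]
segments = [0, 2, 3]
delta = batched_lora(x, adapters, segments)
```

A real kernel performs the per-segment products in parallel on the GPU; the loop here only fixes what result such a kernel is expected to produce.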

Advantages of Parallel PEFT Training

Efficiency: By consolidating shared operations and using optimized kernels, frameworks like Punica enhance computational efficiency.

Scalability: Multi-tenant scheduling enables the system to handle numerous concurrent tasks, making it ideal for large-scale deployments in cloud-based or multi-user environments.

Resource Optimization: Dynamically allocates resources to ensure that all tasks progress efficiently, improving overall system throughput.

System-Level Implications

The advancements in parallel PEFT training, along with centralized and distributed PEFT systems, showcase the growing sophistication in scaling PEFT for real-world applications. These innovations address critical challenges, including resource allocation, data privacy, and task concurrency, ensuring that PEFT methods remain practical, efficient, and scalable for research and industry settings. By enabling efficient multi-task handling, parallel PEFT training frameworks like Punica play a vital role in the widespread adoption of PEFT across diverse domains and use cases.

7. Challenges and Future Directions

Parameter-Efficient Fine-Tuning (PEFT) has emerged as a transformative approach for adapting large-scale models efficiently. However, several challenges remain that need to be addressed to ensure PEFT methods are accessible, scalable, and practical for diverse applications. Below, we delve into these challenges and potential future directions in greater detail.

7.1 Hyperparameter Tuning

PEFT methods often rely on hyperparameters such as ranks in LoRA or bottleneck dimensions in adapter-based methods. These hyperparameters directly influence the trade-off between efficiency and task-specific performance. However, manually tuning these parameters is time-intensive and can lead to suboptimal configurations when dealing with new models or tasks.

Challenges:

Sensitivity to Hyperparameters: LoRA’s performance heavily depends on the choice of rank for its low-rank decomposition, while adapter-based methods require precise bottleneck dimensions for balancing efficiency and expressiveness. Hybrid methods, combining multiple PEFT approaches, are even more sensitive to hyperparameters.

Task-Specific Tuning: Hyperparameters tuned for one task may not generalize well to others, necessitating repeated optimization efforts.
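
To make the rank and bottleneck trade-off concrete, the following sketch counts the trainable parameters each choice introduces. It assumes the common formulations: LoRA adds two factors of shapes d_out x r and r x d_in to a single weight matrix, and a bottleneck adapter adds a down-projection and an up-projection with optional biases. The function names and the example sizes are illustrative.

```python
def lora_params(d_in, d_out, rank):
    """Trainable values added by LoRA to one d_out x d_in weight matrix."""
    return rank * (d_in + d_out)

def adapter_params(d_model, bottleneck, bias=True):
    """Trainable values in one bottleneck adapter (down- and up-projection)."""
    count = 2 * d_model * bottleneck
    if bias:
        count += bottleneck + d_model
    return count

# Example sizes chosen for illustration.
d = 4096
full = d * d                                   # one full weight matrix
for rank in (4, 8, 16, 64):
    added = lora_params(d, d, rank)
    print(f"LoRA rank {rank:>2}: {added:>8} params ({added / full:.4%} of the matrix)")

for bottleneck in (16, 64, 256):
    print(f"Adapter bottleneck {bottleneck:>3}: {adapter_params(d, bottleneck):>8} params")
```

Because both counts grow linearly in the rank or bottleneck size, doubling either hyperparameter doubles the added parameters, which is why the choice directly sets the efficiency side of the trade-off.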

Solutions:

Automated Hyperparameter Optimization: Efforts like NOAH [99] leverage Neural Architecture Search (NAS) to systematically identify effective configurations, and general-purpose techniques such as Bayesian optimization can be applied to the same search problem. These methods reduce the need for expert intervention and enable adaptive tuning across tasks.

Default Configurations: Research into robust, task-agnostic hyperparameter defaults can streamline PEFT application, particularly for new users and resource-constrained environments.
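
As a simple stand-in for the automated approaches above, the sketch below runs an exhaustive search over candidate LoRA ranks and keeps the smallest one whose score is within a tolerance of the best. It is neither NAS nor Bayesian optimization; the function names are hypothetical, and the scoring function is a synthetic curve used only so the example runs, not a measured result. In practice `evaluate` would fine-tune with the given rank and return a validation metric.

```python
def pick_smallest_adequate_rank(candidate_ranks, evaluate, tolerance=0.01):
    """Return the smallest rank scoring within `tolerance` of the best, plus all scores."""
    scores = {rank: evaluate(rank) for rank in candidate_ranks}
    best = max(scores.values())
    for rank in sorted(scores):
        if scores[rank] >= best - tolerance:
            return rank, scores

def toy_validation_score(rank):
    """Synthetic saturating curve (an assumption for illustration, not real data)."""
    return 1.0 - 0.5 / rank

chosen_rank, all_scores = pick_smallest_adequate_rank(
    [1, 2, 4, 8, 16, 32, 64], toy_validation_score, tolerance=0.03
)
print(chosen_rank)
```

Preferring the smallest adequate rank, rather than the single best score, reflects the efficiency goal of PEFT: extra parameters are accepted only when they buy a measurable gain.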

Future Directions:

Comprehensive studies into the impact of hyperparameters across tasks and models to establish reasonable defaults.

Development of hybrid optimization techniques that can handle the interplay of hyperparameters in multi-strategy PEFT methods.

7.2 Unified Benchmarks

The absence of standardized benchmarks for PEFT evaluation is a significant barrier to progress. Current evaluations are fragmented, making it difficult to compare methods objectively or draw meaningful conclusions.

Challenges:

Fragmented Evaluations: Different methods are often tested on varying datasets, model sizes, and evaluation metrics, leading to inconsistent comparisons.

Lack of Reproducibility: Without standardized frameworks, replicating results across studies becomes challenging, hindering collaborative research.

Solutions:

Standardized Benchmarking Frameworks: Inspired by MMDetection [255] in computer vision, a unified PEFT benchmark would include:

Consistent datasets across NLP, vision, multi-modal tasks, and generative modeling.

Standardized evaluation metrics such as accuracy, efficiency (time/memory), and robustness.

Reproducible testing environments.
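
A benchmark of this kind would need to report accuracy and efficiency in one consistent record. The following is a minimal sketch of such a harness using only the Python standard library; the `BenchmarkResult` fields and the `run_benchmark` function are hypothetical, and `tracemalloc` tracks Python heap allocations only, so a real harness would substitute accelerator memory counters.

```python
import time
import tracemalloc
from dataclasses import dataclass

@dataclass
class BenchmarkResult:
    method: str        # e.g. "LoRA" or "Adapter"
    task: str          # dataset or task identifier
    accuracy: float    # fraction of correct predictions
    seconds: float     # wall-clock evaluation time
    peak_bytes: int    # peak Python heap usage during evaluation

def run_benchmark(method, task, predict, examples):
    """Evaluate `predict` on (input, label) pairs and record accuracy, time, memory."""
    tracemalloc.start()
    start = time.perf_counter()
    correct = sum(1 for x, label in examples if predict(x) == label)
    seconds = time.perf_counter() - start
    _, peak = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return BenchmarkResult(method, task, correct / len(examples), seconds, peak)

# Toy predictor and data, for illustration only.
examples = [(1, 1), (2, 0), (3, 1), (4, 1)]
result = run_benchmark("toy-method", "parity", lambda x: x % 2, examples)
print(result.accuracy)
```

Fixing the record format up front is what makes results from different methods directly comparable, which is the gap the proposed benchmark is meant to close.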